GPG Key ID: 4AEE18F83AFDEB23

100 changed files with 3938 additions and 2128 deletions

-

+3 -0.gitignore

-

+1 -1.travis.yml

-

+27 -0CHANGELOG.md

-

+961 -806Cargo.lock

-

+1 -1Cargo.toml

-

+2 -0EXAMPLES.md

-

+1 -1README.md

-

+1 -1appveyor.yml

-

+1 -0components/config/Cargo.toml

-

+50 -11components/config/src/config.rs

-

+6 -4components/config/src/lib.rs

-

+8 -12components/config/src/theme.rs

-

+2 -3components/errors/Cargo.toml

-

+95 -13components/errors/src/lib.rs

-

+1 -1components/front_matter/Cargo.toml

-

+11 -5components/front_matter/src/lib.rs

-

+2 -2components/imageproc/Cargo.toml

-

+98 -32components/imageproc/src/lib.rs

-

+1 -1components/library/Cargo.toml

-

+152 -14components/library/src/content/file_info.rs

-

+182 -31components/library/src/content/page.rs

-

+92 -15components/library/src/content/section.rs

-

+68 -8components/library/src/content/ser.rs

-

+81 -23components/library/src/library.rs

-

+6 -5components/library/src/pagination/mod.rs

-

+3 -2components/library/src/sorting.rs

-

+183 -17components/library/src/taxonomies/mod.rs

-

+80 -55components/rebuild/src/lib.rs

-

+51 -12components/rebuild/tests/rebuild.rs

-

+2 -2components/rendering/Cargo.toml

-

+200 -166components/rendering/src/markdown.rs

-

+14 -15components/rendering/src/shortcode.rs

-

+49 -149components/rendering/src/table_of_contents.rs

-

+95 -5components/rendering/tests/markdown.rs

-

+1 -1components/search/Cargo.toml

-

+1 -1components/site/Cargo.toml

-

+1 -0components/site/benches/gen.py

-

+4 -3components/site/benches/site.rs

-

+271 -197components/site/src/lib.rs

-

+127 -0components/site/src/sitemap.rs

-

+69 -0components/site/tests/common.rs

-

+131 -187components/site/tests/site.rs

-

+141 -0components/site/tests/site_i18n.rs

-

+1 -2components/templates/Cargo.toml

-

+1 -0components/templates/src/builtins/robots.txt

-

+4 -16components/templates/src/builtins/sitemap.xml

-

+7 -0components/templates/src/builtins/split_sitemap_index.xml

-

+12 -12components/templates/src/filters.rs

-

+134 -66components/templates/src/global_fns/load_data.rs

-

+228 -162components/templates/src/global_fns/mod.rs

-

+25 -12components/templates/src/lib.rs

-

+1 -1components/utils/Cargo.toml

-

+20 -5components/utils/src/fs.rs

-

+1 -0components/utils/src/lib.rs

-

+14 -3components/utils/src/templates.rs

-

+44 -0components/utils/src/vec.rs

-

+1 -0components/utils/test-files/test.css

-

+4 -0components/utils/test-files/uneven_rows.csv

-

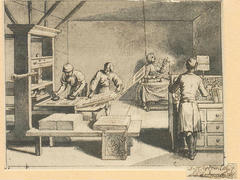

BINdocs/content/documentation/content/image-processing/01-zola.png

-

BINdocs/content/documentation/content/image-processing/02-zola-manet.png

-

BINdocs/content/documentation/content/image-processing/03-zola-cezanne.png

-

+0 -0docs/content/documentation/content/image-processing/04-gutenberg.jpg

-

+0 -0docs/content/documentation/content/image-processing/05-example.jpg

-

+0 -0docs/content/documentation/content/image-processing/06-example.jpg

-

+0 -0docs/content/documentation/content/image-processing/07-example.jpg

-

+0 -0docs/content/documentation/content/image-processing/08-example.jpg

-

+29 -16docs/content/documentation/content/image-processing/index.md

-

+37 -0docs/content/documentation/content/multilingual.md

-

+2 -2docs/content/documentation/content/page.md

-

+4 -0docs/content/documentation/content/shortcodes.md

-

+1 -0docs/content/documentation/content/syntax-highlighting.md

-

+2 -1docs/content/documentation/content/taxonomies.md

-

+7 -0docs/content/documentation/deployment/github-pages.md

-

+12 -1docs/content/documentation/getting-started/cli-usage.md

-

+11 -1docs/content/documentation/getting-started/configuration.md

-

+1 -1docs/content/documentation/getting-started/installation.md

-

+16 -10docs/content/documentation/templates/overview.md

-

+27 -7docs/content/documentation/templates/pages-sections.md

-

+16 -8docs/content/documentation/templates/sitemap.md

-

BINdocs/static/processed_images/0478482c742970ac00.jpg

-

BINdocs/static/processed_images/1794115ed20fc20b00.jpg

-

BINdocs/static/processed_images/1cec18975099962e00.png

-

BINdocs/static/processed_images/2b6a3e5a28bab1f100.jpg

-

BINdocs/static/processed_images/3dba59a146f3bc0900.jpg

-

BINdocs/static/processed_images/4c2ee08a8b7c98fd00.png

-

BINdocs/static/processed_images/5e399fa94c88057a00.jpg

-

BINdocs/static/processed_images/60097aeed903cf3b00.png

-

BINdocs/static/processed_images/60327c08d512e16800.png

-

BINdocs/static/processed_images/63d5c27341a9885c00.jpg

-

BINdocs/static/processed_images/63fe884d13fd318d00.jpg

-

BINdocs/static/processed_images/67f2ebdd806283e900.jpg

-

BINdocs/static/processed_images/70513837257b310c00.jpg

-

BINdocs/static/processed_images/7459e23e962c9d2f00.png

-

BINdocs/static/processed_images/8b446e542d0b692d00.jpg

-

BINdocs/static/processed_images/a9f5475850972f8500.png

-

BINdocs/static/processed_images/ab39b603591b3e3300.jpg

-

BINdocs/static/processed_images/aebd0f00cf9232d000.jpg

-

BINdocs/static/processed_images/baf5a4139772f2c700.png

-

BINdocs/static/processed_images/d364fb703e1e0b3200.jpg

-

BINdocs/static/processed_images/d91d0751df06edce00.jpg

+ 3

- 0

.gitignore

| @@ -1,18 +1,21 @@ | |||

| target | |||

| .idea/ | |||

| test_site/public | |||

| test_site_i18n/public | |||

| docs/public | |||

| small-blog | |||

| medium-blog | |||

| big-blog | |||

| huge-blog | |||

| extra-huge-blog | |||

| small-kb | |||

| medium-kb | |||

| huge-kb | |||

| current.bench | |||

| now.bench | |||

| *.zst | |||

| # snapcraft artifacts | |||

| snap/.snapcraft | |||

+ 1

- 1

.travis.yml

| @@ -16,7 +16,7 @@ matrix: | |||

| # The earliest stable Rust version that works | |||

| - env: TARGET=x86_64-unknown-linux-gnu | |||

| rust: 1.30.0 | |||

| rust: 1.31.0 | |||

| before_install: set -e | |||

+ 27

- 0

CHANGELOG.md

| @@ -1,5 +1,32 @@ | |||

| # Changelog | |||

| ## 0.6.0 (unreleased) | |||

| ### Breaking | |||

| - `earlier/later` and `lighter/heavier` are not set anymore on pages when rendering | |||

| a section | |||

| - The table of content for a page/section is now only available as the `toc` variable when | |||

| rendering it and not anymore on the `page`/`section` variable | |||

| - Default directory for `load_data` is now the root of the site instead of the `content` directory | |||

| - Change variable sent to the sitemap template, see documentation for details | |||

| ### Other | |||

| - Add support for content in multiple languages | |||

| - Lower latency on serve before rebuilding from 2 to 1 second | |||

| - Allow processing PNG and produced images are less blurry | |||

| - Add an id (`zola-continue-reading`) to the paragraph generated after a summary | |||

| - Add Dracula syntax highlighting theme | |||

| - Fix using inline styles in headers | |||

| - Fix sections with render=false being shown in sitemap | |||

| - Sitemap is now split when there are more than 30 000 links in it | |||

| - Add link to sitemap in robots.txt | |||

| - Markdown rendering is now fully CommonMark compliant | |||

| - `load_data` now defaults to loading file as plain text, unless `format` is passed | |||

| or the extension matches csv/toml/json | |||

| - Sitemap entries get an additional `extra` field for pages only | |||

| - Add a `base-path` command line option to `build` and `serve` | |||

| ## 0.5.1 (2018-12-14) | |||

| - Fix deleting markdown file in `zola serve` | |||
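Review note on the `load_data` changelog entry: the new default can be read as a small precedence rule. The sketch below is one way to express it, not the code in `load_data.rs` (which is not shown in this part of the diff). The names `DataFormat`, `parse_format_name` and `resolve_format` are hypothetical, and treating an explicit `format` as taking priority over the file extension is an assumption.

```rust
use std::path::Path;

/// Hypothetical set of formats, mirroring the changelog wording
/// (plain text by default, csv/toml/json recognised by extension).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum DataFormat {
    Plain,
    Csv,
    Toml,
    Json,
}

/// Maps a format name or file extension to a known format, ignoring case.
fn parse_format_name(name: &str) -> Option<DataFormat> {
    match name.to_lowercase().as_str() {
        "plain" => Some(DataFormat::Plain),
        "csv" => Some(DataFormat::Csv),
        "toml" => Some(DataFormat::Toml),
        "json" => Some(DataFormat::Json),
        _ => None,
    }
}

/// Assumed precedence: an explicit `format` argument wins, then the file
/// extension, and anything unrecognised falls back to plain text.
pub fn resolve_format(path: &str, explicit: Option<&str>) -> Result<DataFormat, String> {
    if let Some(name) = explicit {
        // An explicit but unknown format is treated as an error here.
        return parse_format_name(name)
            .ok_or_else(|| format!("Unknown data format: {}", name));
    }
    let ext = Path::new(path).extension().and_then(|e| e.to_str()).unwrap_or("");
    Ok(parse_format_name(ext).unwrap_or(DataFormat::Plain))
}
```

Under these assumptions, `resolve_format("data/notes.txt", None)` yields `DataFormat::Plain`, while passing `Some("plain")` for a `.csv` file overrides the extension.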

+ 961

- 806

Cargo.lock

File diff suppressed because it is too large

+ 1

- 1

Cargo.toml

| @@ -1,6 +1,6 @@ | |||

| [package] | |||

| name = "zola" | |||

| version = "0.5.1" | |||

| version = "0.6.0" | |||

| authors = ["Vincent Prouillet <prouillet.vincent@gmail.com>"] | |||

| license = "MIT" | |||

| readme = "README.md" | |||

+ 2

- 0

EXAMPLES.md

| @@ -19,5 +19,7 @@ | |||

| | [Daniel Sockwell's codesections.com](https://www.codesections.com) | https://gitlab.com/codesections/codesections-website | | |||

| | [Jens Getreu's blog](https://blog.getreu.net) | | | |||

| | [Matthias Endler](https://matthias-endler.de) | https://github.com/mre/mre.github.io | | |||

| | [Michael Plotke](https://michael.plotke.me) | https://gitlab.com/bdjnk/michael | | |||

| | [shaleenjain.com](https://shaleenjain.com) | https://github.com/shalzz/shalzz.github.io | | |||

| | [Hello, Rust!](https://hello-rust.show) | https://github.com/hello-rust/hello-rust.github.io | | |||

| | [maxdeviant.com](https://maxdeviant.com/) | | | |||

+ 1

- 1

README.md

| @@ -16,7 +16,7 @@ in the `docs/content` folder of the repository and the community can use [its fo | |||

| | Syntax highlighting | ✔ | ✔ | ✔ | ✔ | | |||

| | Sass compilation | ✔ | ✔ | ✔ | ✔ | | |||

| | Assets co-location | ✔ | ✔ | ✔ | ✔ | | |||

| | i18n | ✕ | ✕ | ✔ | ✔ | | |||

| | Multilingual site | ✔ | ✕ | ✔ | ✔ | | |||

| | Image processing | ✔ | ✕ | ✔ | ✔ | | |||

| | Sane & powerful template engine | ✔ | ~ | ~ | ✔ | | |||

| | Themes | ✔ | ✕ | ✔ | ✔ | | |||

+ 1

- 1

appveyor.yml

| @@ -10,7 +10,7 @@ environment: | |||

| matrix: | |||

| - target: x86_64-pc-windows-msvc | |||

| RUST_VERSION: 1.29.0 | |||

| RUST_VERSION: 1.31.0 | |||

| - target: x86_64-pc-windows-msvc | |||

| RUST_VERSION: stable | |||

+ 1

- 0

components/config/Cargo.toml

| @@ -13,3 +13,4 @@ lazy_static = "1" | |||

| syntect = "3" | |||

| errors = { path = "../errors" } | |||

| utils = { path = "../utils" } | |||

+ 50

- 11

components/config/src/config.rs

| @@ -1,6 +1,4 @@ | |||

| use std::collections::HashMap; | |||

| use std::fs::File; | |||

| use std::io::prelude::*; | |||

| use std::path::{Path, PathBuf}; | |||

| use chrono::Utc; | |||

| @@ -9,13 +7,29 @@ use syntect::parsing::{SyntaxSet, SyntaxSetBuilder}; | |||

| use toml; | |||

| use toml::Value as Toml; | |||

| use errors::{Result, ResultExt}; | |||

| use errors::Result; | |||

| use highlighting::THEME_SET; | |||

| use theme::Theme; | |||

| use utils::fs::read_file_with_error; | |||

| // We want a default base url for tests | |||

| static DEFAULT_BASE_URL: &'static str = "http://a-website.com"; | |||

| #[derive(Clone, Debug, PartialEq, Eq, Serialize, Deserialize)] | |||

| #[serde(default)] | |||

| pub struct Language { | |||

| /// The language code | |||

| pub code: String, | |||

| /// Whether to generate a RSS feed for that language, defaults to `false` | |||

| pub rss: bool, | |||

| } | |||

| impl Default for Language { | |||

| fn default() -> Language { | |||

| Language { code: String::new(), rss: false } | |||

| } | |||

| } | |||

| #[derive(Clone, Debug, PartialEq, Eq, Serialize, Deserialize)] | |||

| #[serde(default)] | |||

| pub struct Taxonomy { | |||

| @@ -27,6 +41,9 @@ pub struct Taxonomy { | |||

| pub paginate_path: Option<String>, | |||

| /// Whether to generate a RSS feed only for each taxonomy term, defaults to false | |||

| pub rss: bool, | |||

| /// The language for that taxonomy, only used in multilingual sites. | |||

| /// Defaults to the config `default_language` if not set | |||

| pub lang: String, | |||

| } | |||

| impl Taxonomy { | |||

| @@ -49,7 +66,13 @@ impl Taxonomy { | |||

| impl Default for Taxonomy { | |||

| fn default() -> Taxonomy { | |||

| Taxonomy { name: String::new(), paginate_by: None, paginate_path: None, rss: false } | |||

| Taxonomy { | |||

| name: String::new(), | |||

| paginate_by: None, | |||

| paginate_path: None, | |||

| rss: false, | |||

| lang: String::new(), | |||

| } | |||

| } | |||

| } | |||

| @@ -68,6 +91,8 @@ pub struct Config { | |||

| /// The language used in the site. Defaults to "en" | |||

| pub default_language: String, | |||

| /// The list of supported languages outside of the default one | |||

| pub languages: Vec<Language>, | |||

| /// Languages list and translated strings | |||

| pub translations: HashMap<String, Toml>, | |||

| @@ -148,20 +173,23 @@ impl Config { | |||

| Some(glob_set_builder.build().expect("Bad ignored_content in config file.")); | |||

| } | |||

| for taxonomy in config.taxonomies.iter_mut() { | |||

| if taxonomy.lang.is_empty() { | |||

| taxonomy.lang = config.default_language.clone(); | |||

| } | |||

| } | |||

| Ok(config) | |||

| } | |||

| /// Parses a config file from the given path | |||

| pub fn from_file<P: AsRef<Path>>(path: P) -> Result<Config> { | |||

| let mut content = String::new(); | |||

| let path = path.as_ref(); | |||

| let file_name = path.file_name().unwrap(); | |||

| File::open(path) | |||

| .chain_err(|| { | |||

| format!("No `{:?}` file found. Are you in the right directory?", file_name) | |||

| })? | |||

| .read_to_string(&mut content)?; | |||

| let content = read_file_with_error( | |||

| path, | |||

| &format!("No `{:?}` file found. Are you in the right directory?", file_name), | |||

| )?; | |||

| Config::parse(&content) | |||

| } | |||

| @@ -227,6 +255,16 @@ impl Config { | |||

| let theme = Theme::from_file(path)?; | |||

| self.add_theme_extra(&theme) | |||

| } | |||

| /// Is this site using i18n? | |||

| pub fn is_multilingual(&self) -> bool { | |||

| !self.languages.is_empty() | |||

| } | |||

| /// Returns the codes of all additional languages | |||

| pub fn languages_codes(&self) -> Vec<&str> { | |||

| self.languages.iter().map(|l| l.code.as_ref()).collect() | |||

| } | |||

| } | |||

| impl Default for Config { | |||

| @@ -239,6 +277,7 @@ impl Default for Config { | |||

| highlight_code: false, | |||

| highlight_theme: "base16-ocean-dark".to_string(), | |||

| default_language: "en".to_string(), | |||

| languages: Vec::new(), | |||

| generate_rss: false, | |||

| rss_limit: None, | |||

| taxonomies: Vec::new(), | |||
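Review note on the `config.rs` hunks above: the multilingual additions come down to three behaviours, which the standalone sketch below reproduces with trimmed-down structs. The field and method names `default_language`, `languages`, `lang`, `is_multilingual` and `languages_codes` come from the diff; `fill_taxonomy_langs` and `sample_config` are hypothetical helpers added for illustration, since the real code performs the fallback inline in `Config::parse`.

```rust
/// Trimmed-down copies of the structs in the diff; only the fields
/// needed for the language fallback are kept.
#[derive(Clone, Debug, Default, PartialEq)]
pub struct Language {
    pub code: String,
    pub rss: bool,
}

#[derive(Clone, Debug, Default, PartialEq)]
pub struct Taxonomy {
    pub name: String,
    pub lang: String,
}

#[derive(Debug)]
pub struct Config {
    pub default_language: String,
    pub languages: Vec<Language>,
    pub taxonomies: Vec<Taxonomy>,
}

impl Config {
    /// Hypothetical helper: taxonomies without a `lang` inherit the
    /// site's default language, as the parsing code in the diff does.
    pub fn fill_taxonomy_langs(&mut self) {
        for taxonomy in self.taxonomies.iter_mut() {
            if taxonomy.lang.is_empty() {
                taxonomy.lang = self.default_language.clone();
            }
        }
    }

    /// A site is multilingual as soon as one additional language is listed.
    pub fn is_multilingual(&self) -> bool {
        !self.languages.is_empty()
    }

    /// Codes of the additional languages (the default one is not included).
    pub fn languages_codes(&self) -> Vec<&str> {
        self.languages.iter().map(|l| l.code.as_str()).collect()
    }
}

/// Hypothetical sample: English default, French as an extra language.
pub fn sample_config() -> Config {
    Config {
        default_language: "en".to_string(),
        languages: vec![Language { code: "fr".to_string(), rss: false }],
        taxonomies: vec![
            Taxonomy { name: "tags".to_string(), lang: String::new() },
            Taxonomy { name: "auteurs".to_string(), lang: "fr".to_string() },
        ],
    }
}
```

After calling `fill_taxonomy_langs` on the sample, the `tags` taxonomy ends up with `lang == "en"` while `auteurs` keeps `"fr"`. Note that `languages_codes` only lists the additional languages, so callers that need every language have to add `default_language` themselves.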

+ 6

- 4

components/config/src/lib.rs

| @@ -1,18 +1,20 @@ | |||

| #[macro_use] | |||

| extern crate serde_derive; | |||

| extern crate toml; | |||

| #[macro_use] | |||

| extern crate errors; | |||

| extern crate chrono; | |||

| extern crate globset; | |||

| extern crate toml; | |||

| #[macro_use] | |||

| extern crate lazy_static; | |||

| extern crate syntect; | |||

| #[macro_use] | |||

| extern crate errors; | |||

| extern crate utils; | |||

| mod config; | |||

| pub mod highlighting; | |||

| mod theme; | |||

| pub use config::{Config, Taxonomy}; | |||

| pub use config::{Config, Language, Taxonomy}; | |||

| use std::path::Path; | |||

+ 8

- 12

components/config/src/theme.rs

| @@ -1,11 +1,10 @@ | |||

| use std::collections::HashMap; | |||

| use std::fs::File; | |||

| use std::io::prelude::*; | |||

| use std::path::PathBuf; | |||

| use toml::Value as Toml; | |||

| use errors::{Result, ResultExt}; | |||

| use errors::Result; | |||

| use utils::fs::read_file_with_error; | |||

| /// Holds the data from a `theme.toml` file. | |||

| /// There are other fields than `extra` in it but Zola | |||

| @@ -40,15 +39,12 @@ impl Theme { | |||

| /// Parses a theme file from the given path | |||

| pub fn from_file(path: &PathBuf) -> Result<Theme> { | |||

| let mut content = String::new(); | |||

| File::open(path) | |||

| .chain_err(|| { | |||

| "No `theme.toml` file found. \ | |||

| Is the `theme` defined in your `config.toml present in the `themes` directory \ | |||

| and does it have a `theme.toml` inside?" | |||

| })? | |||

| .read_to_string(&mut content)?; | |||

| let content = read_file_with_error( | |||

| path, | |||

| "No `theme.toml` file found. \ | |||

| Is the `theme` defined in your `config.toml present in the `themes` directory \ | |||

| and does it have a `theme.toml` inside?", | |||

| )?; | |||

| Theme::parse(&content) | |||

| } | |||

| } | |||

+ 2

- 3

components/errors/Cargo.toml

| @@ -4,8 +4,7 @@ version = "0.1.0" | |||

| authors = ["Vincent Prouillet <prouillet.vincent@gmail.com>"] | |||

| [dependencies] | |||

| error-chain = "0.12" | |||

| tera = "0.11" | |||

| tera = "1.0.0-alpha.3" | |||

| toml = "0.4" | |||

| image = "0.20" | |||

| image = "0.21" | |||

| syntect = "3" | |||

+ 95

- 13

components/errors/src/lib.rs

| @@ -1,26 +1,108 @@ | |||

| #![allow(unused_doc_comments)] | |||

| #[macro_use] | |||

| extern crate error_chain; | |||

| extern crate image; | |||

| extern crate syntect; | |||

| extern crate tera; | |||

| extern crate toml; | |||

| error_chain! { | |||

| errors {} | |||

| use std::convert::Into; | |||

| use std::error::Error as StdError; | |||

| use std::fmt; | |||

| #[derive(Debug)] | |||

| pub enum ErrorKind { | |||

| Msg(String), | |||

| Tera(tera::Error), | |||

| Io(::std::io::Error), | |||

| Toml(toml::de::Error), | |||

| Image(image::ImageError), | |||

| Syntect(syntect::LoadingError), | |||

| } | |||

| /// The Error type | |||

| #[derive(Debug)] | |||

| pub struct Error { | |||

| /// Kind of error | |||

| pub kind: ErrorKind, | |||

| pub source: Option<Box<dyn StdError>>, | |||

| } | |||

| unsafe impl Sync for Error {} | |||

| unsafe impl Send for Error {} | |||

| impl StdError for Error { | |||

| fn source(&self) -> Option<&(dyn StdError + 'static)> { | |||

| let mut source = self.source.as_ref().map(|c| &**c); | |||

| if source.is_none() { | |||

| match self.kind { | |||

| ErrorKind::Tera(ref err) => source = err.source(), | |||

| _ => (), | |||

| }; | |||

| } | |||

| source | |||

| } | |||

| } | |||

| links { | |||

| Tera(tera::Error, tera::ErrorKind); | |||

| impl fmt::Display for Error { | |||

| fn fmt(&self, f: &mut fmt::Formatter) -> fmt::Result { | |||

| match self.kind { | |||

| ErrorKind::Msg(ref message) => write!(f, "{}", message), | |||

| ErrorKind::Tera(ref e) => write!(f, "{}", e), | |||

| ErrorKind::Io(ref e) => write!(f, "{}", e), | |||

| ErrorKind::Toml(ref e) => write!(f, "{}", e), | |||

| ErrorKind::Image(ref e) => write!(f, "{}", e), | |||

| ErrorKind::Syntect(ref e) => write!(f, "{}", e), | |||

| } | |||

| } | |||

| } | |||

| foreign_links { | |||

| Io(::std::io::Error); | |||

| Toml(toml::de::Error); | |||

| Image(image::ImageError); | |||

| Syntect(syntect::LoadingError); | |||

| impl Error { | |||

| /// Creates generic error | |||

| pub fn msg(value: impl ToString) -> Self { | |||

| Self { kind: ErrorKind::Msg(value.to_string()), source: None } | |||

| } | |||

| /// Creates generic error with a cause | |||

| pub fn chain(value: impl ToString, source: impl Into<Box<dyn StdError>>) -> Self { | |||

| Self { kind: ErrorKind::Msg(value.to_string()), source: Some(source.into()) } | |||

| } | |||

| } | |||

| impl From<&str> for Error { | |||

| fn from(e: &str) -> Self { | |||

| Self::msg(e) | |||

| } | |||

| } | |||

| impl From<String> for Error { | |||

| fn from(e: String) -> Self { | |||

| Self::msg(e) | |||

| } | |||

| } | |||

| impl From<toml::de::Error> for Error { | |||

| fn from(e: toml::de::Error) -> Self { | |||

| Self { kind: ErrorKind::Toml(e), source: None } | |||

| } | |||

| } | |||

| impl From<syntect::LoadingError> for Error { | |||

| fn from(e: syntect::LoadingError) -> Self { | |||

| Self { kind: ErrorKind::Syntect(e), source: None } | |||

| } | |||

| } | |||

| impl From<tera::Error> for Error { | |||

| fn from(e: tera::Error) -> Self { | |||

| Self { kind: ErrorKind::Tera(e), source: None } | |||

| } | |||

| } | |||

| impl From<::std::io::Error> for Error { | |||

| fn from(e: ::std::io::Error) -> Self { | |||

| Self { kind: ErrorKind::Io(e), source: None } | |||

| } | |||

| } | |||

| impl From<image::ImageError> for Error { | |||

| fn from(e: image::ImageError) -> Self { | |||

| Self { kind: ErrorKind::Image(e), source: None } | |||

| } | |||

| } | |||

| /// Convenient wrapper around std::Result. | |||

| pub type Result<T> = ::std::result::Result<T, Error>; | |||

| // So we can use bail! in all other crates | |||

| #[macro_export] | |||
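Review note on the `errors` rewrite: the hunk replaces `error_chain!` and its `chain_err` with a hand-written `Error` type whose `chain` constructor stores the underlying cause. The sketch below is a reduced version of that idea, assuming a single message field instead of the `ErrorKind` enum. `parse_port` and `describe` are hypothetical helpers that show the `map_err(|e| Error::chain(..., e))` call pattern used elsewhere in this diff, and how a caller can walk the cause chain through `source()`.

```rust
use std::error::Error as StdError;
use std::fmt;

/// Reduced error type: one message plus an optional underlying cause.
#[derive(Debug)]
pub struct Error {
    message: String,
    source: Option<Box<dyn StdError>>,
}

impl Error {
    /// Creates an error without a cause.
    pub fn msg(value: impl ToString) -> Self {
        Error { message: value.to_string(), source: None }
    }

    /// Creates an error that wraps the given cause.
    pub fn chain(value: impl ToString, source: impl Into<Box<dyn StdError>>) -> Self {
        Error { message: value.to_string(), source: Some(source.into()) }
    }
}

impl fmt::Display for Error {
    fn fmt(&self, f: &mut fmt::Formatter) -> fmt::Result {
        write!(f, "{}", self.message)
    }
}

impl StdError for Error {
    fn source(&self) -> Option<&(dyn StdError + 'static)> {
        self.source.as_ref().map(|c| &**c)
    }
}

/// Hypothetical caller: wraps a std parse error with context, the same
/// way the diff replaces `.chain_err(|| ...)` with `.map_err(|e| ...)`.
pub fn parse_port(raw: &str) -> Result<u16, Error> {
    raw.trim()
        .parse::<u16>()
        .map_err(|e| Error::chain(format!("Invalid port `{}`", raw), e))
}

/// Collects the message of an error and of every cause below it.
pub fn describe(err: &Error) -> Vec<String> {
    let mut lines = vec![err.to_string()];
    let mut cause = err.source();
    while let Some(e) = cause {
        lines.push(e.to_string());
        cause = e.source();
    }
    lines
}
```

The sketch deliberately leaves out the `unsafe impl Sync`/`Send` lines from the hunk. Because the boxed cause is a plain `Box<dyn StdError>`, those impls assert thread safety that the compiler cannot check, so that part of the change may deserve a closer look.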

+ 1

- 1

components/front_matter/Cargo.toml

| @@ -4,7 +4,7 @@ version = "0.1.0" | |||

| authors = ["Vincent Prouillet <prouillet.vincent@gmail.com>"] | |||

| [dependencies] | |||

| tera = "0.11" | |||

| tera = "1.0.0-alpha.3" | |||

| chrono = "0.4" | |||

| serde = "1" | |||

| serde_derive = "1" | |||

+ 11

- 5

components/front_matter/src/lib.rs

| @@ -12,7 +12,7 @@ extern crate toml; | |||

| extern crate errors; | |||

| extern crate utils; | |||

| use errors::{Result, ResultExt}; | |||

| use errors::{Error, Result}; | |||

| use regex::Regex; | |||

| use std::path::Path; | |||

| @@ -71,8 +71,11 @@ pub fn split_section_content( | |||

| content: &str, | |||

| ) -> Result<(SectionFrontMatter, String)> { | |||

| let (front_matter, content) = split_content(file_path, content)?; | |||

| let meta = SectionFrontMatter::parse(&front_matter).chain_err(|| { | |||

| format!("Error when parsing front matter of section `{}`", file_path.to_string_lossy()) | |||

| let meta = SectionFrontMatter::parse(&front_matter).map_err(|e| { | |||

| Error::chain( | |||

| format!("Error when parsing front matter of section `{}`", file_path.to_string_lossy()), | |||

| e, | |||

| ) | |||

| })?; | |||

| Ok((meta, content)) | |||

| } | |||

| @@ -81,8 +84,11 @@ pub fn split_section_content( | |||

| /// Returns a parsed `PageFrontMatter` and the rest of the content | |||

| pub fn split_page_content(file_path: &Path, content: &str) -> Result<(PageFrontMatter, String)> { | |||

| let (front_matter, content) = split_content(file_path, content)?; | |||

| let meta = PageFrontMatter::parse(&front_matter).chain_err(|| { | |||

| format!("Error when parsing front matter of page `{}`", file_path.to_string_lossy()) | |||

| let meta = PageFrontMatter::parse(&front_matter).map_err(|e| { | |||

| Error::chain( | |||

| format!("Error when parsing front matter of page `{}`", file_path.to_string_lossy()), | |||

| e, | |||

| ) | |||

| })?; | |||

| Ok((meta, content)) | |||

| } | |||

+ 2

- 2

components/imageproc/Cargo.toml

| @@ -6,8 +6,8 @@ authors = ["Vojtěch Král <vojtech@kral.hk>"] | |||

| [dependencies] | |||

| lazy_static = "1" | |||

| regex = "1.0" | |||

| tera = "0.11" | |||

| image = "0.20" | |||

| tera = "1.0.0-alpha.3" | |||

| image = "0.21" | |||

| rayon = "1" | |||

| errors = { path = "../errors" } | |||

+ 98

- 32

components/imageproc/src/lib.rs

| @@ -15,18 +15,19 @@ use std::hash::{Hash, Hasher}; | |||

| use std::path::{Path, PathBuf}; | |||

| use image::jpeg::JPEGEncoder; | |||

| use image::png::PNGEncoder; | |||

| use image::{FilterType, GenericImageView}; | |||

| use rayon::prelude::*; | |||

| use regex::Regex; | |||

| use errors::{Result, ResultExt}; | |||

| use errors::{Error, Result}; | |||

| use utils::fs as ufs; | |||

| static RESIZED_SUBDIR: &'static str = "processed_images"; | |||

| lazy_static! { | |||

| pub static ref RESIZED_FILENAME: Regex = | |||

| Regex::new(r#"([0-9a-f]{16})([0-9a-f]{2})[.]jpg"#).unwrap(); | |||

| Regex::new(r#"([0-9a-f]{16})([0-9a-f]{2})[.](jpg|png)"#).unwrap(); | |||

| } | |||

| /// Describes the precise kind of a resize operation | |||

| @@ -136,12 +137,78 @@ impl Hash for ResizeOp { | |||

| } | |||

| } | |||

| /// Thumbnail image format | |||

| #[derive(Debug, Clone, Copy, PartialEq, Eq)] | |||

| pub enum Format { | |||

| /// JPEG, The `u8` argument is JPEG quality (in percent). | |||

| Jpeg(u8), | |||

| /// PNG | |||

| Png, | |||

| } | |||

| impl Format { | |||

| pub fn from_args(source: &str, format: &str, quality: u8) -> Result<Format> { | |||

| use Format::*; | |||

| assert!(quality > 0 && quality <= 100, "Jpeg quality must be within the range [1; 100]"); | |||

| match format { | |||

| "auto" => match Self::is_lossy(source) { | |||

| Some(true) => Ok(Jpeg(quality)), | |||

| Some(false) => Ok(Png), | |||

| None => Err(format!("Unsupported image file: {}", source).into()), | |||

| }, | |||

| "jpeg" | "jpg" => Ok(Jpeg(quality)), | |||

| "png" => Ok(Png), | |||

| _ => Err(format!("Invalid image format: {}", format).into()), | |||

| } | |||

| } | |||

| /// Looks at file's extension and, if it's a supported image format, returns whether the format is lossy | |||

| pub fn is_lossy<P: AsRef<Path>>(p: P) -> Option<bool> { | |||

| p.as_ref() | |||

| .extension() | |||

| .and_then(|s| s.to_str()) | |||

| .map(|ext| match ext.to_lowercase().as_str() { | |||

| "jpg" | "jpeg" => Some(true), | |||

| "png" => Some(false), | |||

| "gif" => Some(false), | |||

| "bmp" => Some(false), | |||

| _ => None, | |||

| }) | |||

| .unwrap_or(None) | |||

| } | |||

| fn extension(&self) -> &str { | |||

| // Kept in sync with RESIZED_FILENAME and op_filename | |||

| use Format::*; | |||

| match *self { | |||

| Png => "png", | |||

| Jpeg(_) => "jpg", | |||

| } | |||

| } | |||

| } | |||

| impl Hash for Format { | |||

| fn hash<H: Hasher>(&self, hasher: &mut H) { | |||

| use Format::*; | |||

| let q = match *self { | |||

| Png => 0, | |||

| Jpeg(q) => q, | |||

| }; | |||

| hasher.write_u8(q); | |||

| } | |||

| } | |||

| /// Holds all data needed to perform a resize operation | |||

| #[derive(Debug, PartialEq, Eq)] | |||

| pub struct ImageOp { | |||

| source: String, | |||

| op: ResizeOp, | |||

| quality: u8, | |||

| format: Format, | |||

| /// Hash of the above parameters | |||

| hash: u64, | |||

| /// If there is a hash collision with another ImageOp, this contains a sequential ID > 1 | |||

| @@ -152,14 +219,14 @@ pub struct ImageOp { | |||

| } | |||

| impl ImageOp { | |||

| pub fn new(source: String, op: ResizeOp, quality: u8) -> ImageOp { | |||

| pub fn new(source: String, op: ResizeOp, format: Format) -> ImageOp { | |||

| let mut hasher = DefaultHasher::new(); | |||

| hasher.write(source.as_ref()); | |||

| op.hash(&mut hasher); | |||

| hasher.write_u8(quality); | |||

| format.hash(&mut hasher); | |||

| let hash = hasher.finish(); | |||

| ImageOp { source, op, quality, hash, collision_id: 0 } | |||

| ImageOp { source, op, format, hash, collision_id: 0 } | |||

| } | |||

| pub fn from_args( | |||

| @@ -167,10 +234,12 @@ impl ImageOp { | |||

| op: &str, | |||

| width: Option<u32>, | |||

| height: Option<u32>, | |||

| format: &str, | |||

| quality: u8, | |||

| ) -> Result<ImageOp> { | |||

| let op = ResizeOp::from_args(op, width, height)?; | |||

| Ok(Self::new(source, op, quality)) | |||

| let format = Format::from_args(&source, format, quality)?; | |||

| Ok(Self::new(source, op, format)) | |||

| } | |||

| fn perform(&self, content_path: &Path, target_path: &Path) -> Result<()> { | |||

| @@ -184,7 +253,7 @@ impl ImageOp { | |||

| let mut img = image::open(&src_path)?; | |||

| let (img_w, img_h) = img.dimensions(); | |||

| const RESIZE_FILTER: FilterType = FilterType::Gaussian; | |||

| const RESIZE_FILTER: FilterType = FilterType::Lanczos3; | |||

| const RATIO_EPSILLION: f32 = 0.1; | |||

| let img = match self.op { | |||

| @@ -223,9 +292,19 @@ impl ImageOp { | |||

| }; | |||

| let mut f = File::create(target_path)?; | |||

| let mut enc = JPEGEncoder::new_with_quality(&mut f, self.quality); | |||

| let (img_w, img_h) = img.dimensions(); | |||

| enc.encode(&img.raw_pixels(), img_w, img_h, img.color())?; | |||

| match self.format { | |||

| Format::Png => { | |||

| let mut enc = PNGEncoder::new(&mut f); | |||

| enc.encode(&img.raw_pixels(), img_w, img_h, img.color())?; | |||

| } | |||

| Format::Jpeg(q) => { | |||

| let mut enc = JPEGEncoder::new_with_quality(&mut f, q); | |||

| enc.encode(&img.raw_pixels(), img_w, img_h, img.color())?; | |||

| } | |||

| } | |||

| Ok(()) | |||

| } | |||

| } | |||

| @@ -323,20 +402,21 @@ impl Processor { | |||

| collision_id | |||

| } | |||

| fn op_filename(hash: u64, collision_id: u32) -> String { | |||

| fn op_filename(hash: u64, collision_id: u32, format: Format) -> String { | |||

| // Please keep this in sync with RESIZED_FILENAME | |||

| assert!(collision_id < 256, "Unexpectedly large number of collisions: {}", collision_id); | |||

| format!("{:016x}{:02x}.jpg", hash, collision_id) | |||

| format!("{:016x}{:02x}.{}", hash, collision_id, format.extension()) | |||

| } | |||

| fn op_url(&self, hash: u64, collision_id: u32) -> String { | |||

| format!("{}/{}", &self.resized_url, Self::op_filename(hash, collision_id)) | |||

| fn op_url(&self, hash: u64, collision_id: u32, format: Format) -> String { | |||

| format!("{}/{}", &self.resized_url, Self::op_filename(hash, collision_id, format)) | |||

| } | |||

| pub fn insert(&mut self, img_op: ImageOp) -> String { | |||

| let hash = img_op.hash; | |||

| let format = img_op.format; | |||

| let collision_id = self.insert_with_collisions(img_op); | |||

| self.op_url(hash, collision_id) | |||

| self.op_url(hash, collision_id, format) | |||

| } | |||

| pub fn prune(&self) -> Result<()> { | |||

| @@ -373,25 +453,11 @@ impl Processor { | |||

| self.img_ops | |||

| .par_iter() | |||

| .map(|(hash, op)| { | |||

| let target = self.resized_path.join(Self::op_filename(*hash, op.collision_id)); | |||

| let target = | |||

| self.resized_path.join(Self::op_filename(*hash, op.collision_id, op.format)); | |||

| op.perform(&self.content_path, &target) | |||

| .chain_err(|| format!("Failed to process image: {}", op.source)) | |||

| .map_err(|e| Error::chain(format!("Failed to process image: {}", op.source), e)) | |||

| }) | |||

| .collect::<Result<()>>() | |||

| } | |||

| } | |||

| /// Looks at file's extension and returns whether it's a supported image format | |||

| pub fn file_is_img<P: AsRef<Path>>(p: P) -> bool { | |||

| p.as_ref() | |||

| .extension() | |||

| .and_then(|s| s.to_str()) | |||

| .map(|ext| match ext.to_lowercase().as_str() { | |||

| "jpg" | "jpeg" => true, | |||

| "png" => true, | |||

| "gif" => true, | |||

| "bmp" => true, | |||

| _ => false, | |||

| }) | |||

| .unwrap_or(false) | |||

| } | |||
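Review note on the `imageproc` changes: the output format is now chosen from the source extension when `format` is `"auto"`, and the chosen format drives the extension of the generated file name. The sketch below isolates those two pieces so they can be run without the `image` crate. `Format`, `is_lossy`, `extension` and `op_filename` follow the diff, while `auto_format` is a hypothetical free function standing in for the `"auto"` arm of `Format::from_args`, returning a `String` error instead of the crate's `Error`.

```rust
use std::path::Path;

/// Output format of a processed image; the `u8` is the JPEG quality.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Format {
    Jpeg(u8),
    Png,
}

impl Format {
    /// File extension used for the processed image.
    pub fn extension(&self) -> &'static str {
        match self {
            Format::Png => "png",
            Format::Jpeg(_) => "jpg",
        }
    }
}

/// `Some(true)` for lossy sources, `Some(false)` for lossless ones,
/// `None` when the extension is not a supported image format.
pub fn is_lossy<P: AsRef<Path>>(p: P) -> Option<bool> {
    let ext = p.as_ref().extension()?.to_str()?.to_lowercase();
    match ext.as_str() {
        "jpg" | "jpeg" => Some(true),
        "png" | "gif" | "bmp" => Some(false),
        _ => None,
    }
}

/// Hypothetical stand-in for the `"auto"` branch: lossy sources stay
/// JPEG, lossless sources are written as PNG.
pub fn auto_format(source: &str, quality: u8) -> Result<Format, String> {
    match is_lossy(source) {
        Some(true) => Ok(Format::Jpeg(quality)),
        Some(false) => Ok(Format::Png),
        None => Err(format!("Unsupported image file: {}", source)),
    }
}

/// 16 hex digits of hash, 2 hex digits of collision id, then the extension.
pub fn op_filename(hash: u64, collision_id: u32, format: Format) -> String {
    format!("{:016x}{:02x}.{}", hash, collision_id, format.extension())
}
```

With these definitions, `op_filename(0x1cec18975099962e, 0, Format::Png)` gives `1cec18975099962e00.png`, which has the same shape as the regenerated files under `docs/static/processed_images/` in the file list. One consequence of the `"auto"` rule is that GIF and BMP sources are written out as PNG.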

+ 1

- 1

components/library/Cargo.toml

| @@ -7,7 +7,7 @@ authors = ["Vincent Prouillet <prouillet.vincent@gmail.com>"] | |||

| slotmap = "0.2" | |||

| rayon = "1" | |||

| chrono = { version = "0.4", features = ["serde"] } | |||

| tera = "0.11" | |||

| tera = "1.0.0-alpha.3" | |||

| serde = "1" | |||

| serde_derive = "1" | |||

| slug = "0.1" | |||

+ 152

- 14

components/library/src/content/file_info.rs

| @@ -1,5 +1,8 @@ | |||

| use std::path::{Path, PathBuf}; | |||

| use config::Config; | |||

| use errors::Result; | |||

| /// Takes a full path to a file and returns only the components after the first `content` directory | |||

| /// Will not return the filename as last component | |||

| pub fn find_content_components<P: AsRef<Path>>(path: P) -> Vec<String> { | |||

| @@ -28,7 +31,10 @@ pub fn find_content_components<P: AsRef<Path>>(path: P) -> Vec<String> { | |||

| pub struct FileInfo { | |||

| /// The full path to the .md file | |||

| pub path: PathBuf, | |||

| /// The on-disk filename, will differ from the `name` when there is a language code in it | |||

| pub filename: String, | |||

| /// The name of the .md file without the extension, always `_index` for sections | |||

| /// Doesn't contain the language if there was one in the filename | |||

| pub name: String, | |||

| /// The .md path, starting from the content directory, with `/` slashes | |||

| pub relative: String, | |||

| @@ -40,14 +46,19 @@ pub struct FileInfo { | |||

| /// For example a file at content/kb/solutions/blabla.md will have 2 components: | |||

| /// `kb` and `solutions` | |||

| pub components: Vec<String>, | |||

| /// This is `parent` + `name`, used to find content referring to the same content but in | |||

| /// various languages. | |||

| pub canonical: PathBuf, | |||

| } | |||

| impl FileInfo { | |||

| pub fn new_page(path: &Path) -> FileInfo { | |||

| pub fn new_page(path: &Path, base_path: &PathBuf) -> FileInfo { | |||

| let file_path = path.to_path_buf(); | |||

| let mut parent = file_path.parent().unwrap().to_path_buf(); | |||

| let mut parent = file_path.parent().expect("Get parent of page").to_path_buf(); | |||

| let name = path.file_stem().unwrap().to_string_lossy().to_string(); | |||

| let mut components = find_content_components(&file_path); | |||

| let mut components = find_content_components( | |||

| &file_path.strip_prefix(base_path).expect("Strip base path prefix for page"), | |||

| ); | |||

| let relative = if !components.is_empty() { | |||

| format!("{}/{}.md", components.join("/"), name) | |||

| } else { | |||

| @@ -55,16 +66,20 @@ impl FileInfo { | |||

| }; | |||

| // If we have a folder with an asset, don't consider it as a component | |||

| if !components.is_empty() && name == "index" { | |||

| // Splitting on `.` as we might have a language so it isn't *only* index but also index.fr | |||

| // etc | |||

| if !components.is_empty() && name.split('.').collect::<Vec<_>>()[0] == "index" { | |||

| components.pop(); | |||

| // also set parent_path to grandparent instead | |||

| parent = parent.parent().unwrap().to_path_buf(); | |||

| } | |||

| FileInfo { | |||

| filename: file_path.file_name().unwrap().to_string_lossy().to_string(), | |||

| path: file_path, | |||

| // We don't care about grand parent for pages | |||

| grand_parent: None, | |||

| canonical: parent.join(&name), | |||

| parent, | |||

| name, | |||

| components, | |||

| @@ -72,26 +87,61 @@ impl FileInfo { | |||

| } | |||

| } | |||

| pub fn new_section(path: &Path) -> FileInfo { | |||

| let parent = path.parent().unwrap().to_path_buf(); | |||

| let components = find_content_components(path); | |||

| let relative = if components.is_empty() { | |||

| // the index one | |||

| "_index.md".to_string() | |||

| pub fn new_section(path: &Path, base_path: &PathBuf) -> FileInfo { | |||

| let file_path = path.to_path_buf(); | |||

| let parent = path.parent().expect("Get parent of section").to_path_buf(); | |||

| let name = path.file_stem().unwrap().to_string_lossy().to_string(); | |||

| let components = find_content_components( | |||

| &file_path.strip_prefix(base_path).expect("Strip base path prefix for section"), | |||

| ); | |||

| let relative = if !components.is_empty() { | |||

| format!("{}/{}.md", components.join("/"), name) | |||

| } else { | |||

| format!("{}/_index.md", components.join("/")) | |||

| format!("{}.md", name) | |||

| }; | |||

| let grand_parent = parent.parent().map(|p| p.to_path_buf()); | |||

| FileInfo { | |||

| path: path.to_path_buf(), | |||

| filename: file_path.file_name().unwrap().to_string_lossy().to_string(), | |||

| path: file_path, | |||

| canonical: parent.join(&name), | |||

| parent, | |||

| grand_parent, | |||

| name: "_index".to_string(), | |||

| name, | |||

| components, | |||

| relative, | |||

| } | |||

| } | |||

| /// Look for a language in the filename. | |||

| /// If a language has been found, update the name of the file in this struct to | |||

| /// remove it and return the language code | |||

| pub fn find_language(&mut self, config: &Config) -> Result<String> { | |||

| // No languages? Nothing to do | |||

| if !config.is_multilingual() { | |||

| return Ok(config.default_language.clone()); | |||

| } | |||

| if !self.name.contains('.') { | |||

| return Ok(config.default_language.clone()); | |||

| } | |||

| // Go with the assumption that no one is using `.` in filenames when using i18n | |||

| // We can document that | |||

| let mut parts: Vec<String> = self.name.splitn(2, '.').map(|s| s.to_string()).collect(); | |||

| // The language code is not present in the config: typo or the user forgot to add it to the | |||

| // config | |||

| if !config.languages_codes().contains(&parts[1].as_ref()) { | |||

| bail!("File {:?} has a language code of {} which isn't present in the config.toml `languages`", self.path, parts[1]); | |||

| } | |||

| self.name = parts.swap_remove(0); | |||

| self.canonical = self.parent.join(&self.name); | |||

| let lang = parts.swap_remove(0); | |||

| Ok(lang) | |||

| } | |||

| } | |||

| #[doc(hidden)] | |||

| @@ -101,16 +151,22 @@ impl Default for FileInfo { | |||

| path: PathBuf::new(), | |||

| parent: PathBuf::new(), | |||

| grand_parent: None, | |||

| filename: String::new(), | |||

| name: String::new(), | |||

| components: vec![], | |||

| relative: String::new(), | |||

| canonical: PathBuf::new(), | |||

| } | |||

| } | |||

| } | |||

| #[cfg(test)] | |||

| mod tests { | |||

| use super::find_content_components; | |||

| use std::path::{Path, PathBuf}; | |||

| use config::{Config, Language}; | |||

| use super::{find_content_components, FileInfo}; | |||

| #[test] | |||

| fn can_find_content_components() { | |||

| @@ -118,4 +174,86 @@ mod tests { | |||

| find_content_components("/home/vincent/code/site/content/posts/tutorials/python.md"); | |||

| assert_eq!(res, ["posts".to_string(), "tutorials".to_string()]); | |||

| } | |||

| #[test] | |||

| fn can_find_components_in_page_with_assets() { | |||

| let file = FileInfo::new_page( | |||

| &Path::new("/home/vincent/code/site/content/posts/tutorials/python/index.md"), | |||

| &PathBuf::new(), | |||

| ); | |||

| assert_eq!(file.components, ["posts".to_string(), "tutorials".to_string()]); | |||

| } | |||

| #[test] | |||

| fn doesnt_fail_with_multiple_content_directories() { | |||

| let file = FileInfo::new_page( | |||

| &Path::new("/home/vincent/code/content/site/content/posts/tutorials/python/index.md"), | |||

| &PathBuf::from("/home/vincent/code/content/site"), | |||

| ); | |||

| assert_eq!(file.components, ["posts".to_string(), "tutorials".to_string()]); | |||

| } | |||

| #[test] | |||

| fn can_find_valid_language_in_page() { | |||

| let mut config = Config::default(); | |||

| config.languages.push(Language { code: String::from("fr"), rss: false }); | |||

| let mut file = FileInfo::new_page( | |||

| &Path::new("/home/vincent/code/site/content/posts/tutorials/python.fr.md"), | |||

| &PathBuf::new(), | |||

| ); | |||

| let res = file.find_language(&config); | |||

| assert!(res.is_ok()); | |||

| assert_eq!(res.unwrap(), "fr"); | |||

| } | |||

| #[test] | |||

| fn can_find_valid_language_in_page_with_assets() { | |||

| let mut config = Config::default(); | |||

| config.languages.push(Language { code: String::from("fr"), rss: false }); | |||

| let mut file = FileInfo::new_page( | |||

| &Path::new("/home/vincent/code/site/content/posts/tutorials/python/index.fr.md"), | |||

| &PathBuf::new(), | |||

| ); | |||

| assert_eq!(file.components, ["posts".to_string(), "tutorials".to_string()]); | |||

| let res = file.find_language(&config); | |||

| assert!(res.is_ok()); | |||

| assert_eq!(res.unwrap(), "fr"); | |||

| } | |||

| #[test] | |||

| fn do_nothing_on_unknown_language_in_page_with_i18n_off() { | |||

| let config = Config::default(); | |||

| let mut file = FileInfo::new_page( | |||

| &Path::new("/home/vincent/code/site/content/posts/tutorials/python.fr.md"), | |||

| &PathBuf::new(), | |||

| ); | |||

| let res = file.find_language(&config); | |||

| assert!(res.is_ok()); | |||

| assert_eq!(res.unwrap(), config.default_language); | |||

| } | |||

| #[test] | |||

| fn errors_on_unknown_language_in_page_with_i18n_on() { | |||

| let mut config = Config::default(); | |||

| config.languages.push(Language { code: String::from("it"), rss: false }); | |||

| let mut file = FileInfo::new_page( | |||

| &Path::new("/home/vincent/code/site/content/posts/tutorials/python.fr.md"), | |||

| &PathBuf::new(), | |||

| ); | |||

| let res = file.find_language(&config); | |||

| assert!(res.is_err()); | |||

| } | |||

| #[test] | |||

| fn can_find_valid_language_in_section() { | |||

| let mut config = Config::default(); | |||

| config.languages.push(Language { code: String::from("fr"), rss: false }); | |||

| let mut file = FileInfo::new_section( | |||

| &Path::new("/home/vincent/code/site/content/posts/tutorials/_index.fr.md"), | |||

| &PathBuf::new(), | |||

| ); | |||

| let res = file.find_language(&config); | |||

| assert!(res.is_ok()); | |||

| assert_eq!(res.unwrap(), "fr"); | |||

| } | |||

| } | |||
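The language lookup added in `find_language` can be read in isolation. Below is a minimal sketch of the same idea, not the code from this commit. `split_language` and its parameters are hypothetical names, and an empty `known_langs` slice stands in for `config.is_multilingual()` returning false.

```rust
/// Hypothetical helper (not part of the diff): splits a file stem such as
/// `python.fr` into `(name, lang)`, mirroring the checks in `find_language`.
fn split_language<'a>(
    stem: &'a str,
    default_lang: &'a str,
    known_langs: &[&str],
) -> Result<(&'a str, &'a str), String> {
    // Assumption: no configured languages means i18n is off, so the stem is
    // left untouched and the default language is returned.
    if known_langs.is_empty() {
        return Ok((stem, default_lang));
    }
    // Split on the first `.` only, like `splitn(2, '.')` in the diff.
    match stem.split_once('.') {
        // No `.` in the stem: the file is in the default language.
        None => Ok((stem, default_lang)),
        // The suffix is a configured language code.
        Some((name, lang)) if known_langs.iter().any(|k| *k == lang) => Ok((name, lang)),
        // The suffix looks like a language code but is not configured.
        Some((_, lang)) => Err(format!(
            "language code `{}` is not listed in `languages`",
            lang
        )),
    }
}
```

As in the diff, a stem that contains a `.` while i18n is on is always treated as `name.lang`, so dots in filenames are assumed to be reserved for language codes.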

+ 182

- 31

components/library/src/content/page.rs

| @@ -8,7 +8,7 @@ use slug::slugify; | |||

| use tera::{Context as TeraContext, Tera}; | |||

| use config::Config; | |||

| use errors::{Result, ResultExt}; | |||

| use errors::{Error, Result}; | |||

| use front_matter::{split_page_content, InsertAnchor, PageFrontMatter}; | |||

| use library::Library; | |||

| use rendering::{render_content, Header, RenderContext}; | |||

| @@ -71,14 +71,19 @@ pub struct Page { | |||

| /// How long would it take to read the raw content. | |||

| /// See `get_reading_analytics` on how it is calculated | |||

| pub reading_time: Option<usize>, | |||

| /// The language of that page. Equal to the default lang if the user doesn't setup `languages` in config. | |||

| /// Corresponds to the lang in the {slug}.{lang}.md file scheme | |||

| pub lang: String, | |||

| /// Contains all the translated version of that page | |||

| pub translations: Vec<Key>, | |||

| } | |||

| impl Page { | |||

| pub fn new<P: AsRef<Path>>(file_path: P, meta: PageFrontMatter) -> Page { | |||

| pub fn new<P: AsRef<Path>>(file_path: P, meta: PageFrontMatter, base_path: &PathBuf) -> Page { | |||

| let file_path = file_path.as_ref(); | |||

| Page { | |||

| file: FileInfo::new_page(file_path), | |||

| file: FileInfo::new_page(file_path, base_path), | |||

| meta, | |||

| ancestors: vec![], | |||

| raw_content: "".to_string(), | |||

| @@ -97,6 +102,8 @@ impl Page { | |||

| toc: vec![], | |||

| word_count: None, | |||

| reading_time: None, | |||

| lang: String::new(), | |||

| translations: Vec::new(), | |||

| } | |||

| } | |||

| @@ -107,9 +114,16 @@ impl Page { | |||

| /// Parse a page given the content of the .md file | |||

| /// Files without front matter or with invalid front matter are considered | |||

| /// erroneous | |||

| pub fn parse(file_path: &Path, content: &str, config: &Config) -> Result<Page> { | |||

| pub fn parse( | |||

| file_path: &Path, | |||

| content: &str, | |||

| config: &Config, | |||

| base_path: &PathBuf, | |||

| ) -> Result<Page> { | |||

| let (meta, content) = split_page_content(file_path, content)?; | |||

| let mut page = Page::new(file_path, meta); | |||

| let mut page = Page::new(file_path, meta, base_path); | |||

| page.lang = page.file.find_language(config)?; | |||

| page.raw_content = content; | |||

| let (word_count, reading_time) = get_reading_analytics(&page.raw_content); | |||

| @@ -117,7 +131,16 @@ impl Page { | |||

| page.reading_time = Some(reading_time); | |||

| let mut slug_from_dated_filename = None; | |||

| if let Some(ref caps) = RFC3339_DATE.captures(&page.file.name.replace(".md", "")) { | |||

| let file_path = if page.file.name == "index" { | |||

| if let Some(parent) = page.file.path.parent() { | |||

| parent.file_name().unwrap().to_str().unwrap().to_string() | |||

| } else { | |||

| page.file.name.replace(".md", "") | |||

| } | |||

| } else { | |||

| page.file.name.replace(".md", "") | |||

| }; | |||

| if let Some(ref caps) = RFC3339_DATE.captures(&file_path) { | |||

| slug_from_dated_filename = Some(caps.name("slug").unwrap().as_str().to_string()); | |||

| if page.meta.date.is_none() { | |||

| page.meta.date = Some(caps.name("datetime").unwrap().as_str().to_string()); | |||

| @@ -130,7 +153,11 @@ impl Page { | |||

| slug.trim().to_string() | |||

| } else if page.file.name == "index" { | |||

| if let Some(parent) = page.file.path.parent() { | |||

| slugify(parent.file_name().unwrap().to_str().unwrap()) | |||

| if let Some(slug) = slug_from_dated_filename { | |||

| slugify(&slug) | |||

| } else { | |||

| slugify(parent.file_name().unwrap().to_str().unwrap()) | |||

| } | |||

| } else { | |||

| slugify(&page.file.name) | |||

| } | |||

| @@ -144,13 +171,19 @@ impl Page { | |||

| }; | |||

| if let Some(ref p) = page.meta.path { | |||

| page.path = p.trim().trim_left_matches('/').to_string(); | |||

| page.path = p.trim().trim_start_matches('/').to_string(); | |||

| } else { | |||

| page.path = if page.file.components.is_empty() { | |||

| let mut path = if page.file.components.is_empty() { | |||

| page.slug.clone() | |||

| } else { | |||

| format!("{}/{}", page.file.components.join("/"), page.slug) | |||

| }; | |||

| if page.lang != config.default_language { | |||

| path = format!("{}/{}", page.lang, path); | |||

| } | |||

| page.path = path; | |||

| } | |||

| if !page.path.ends_with('/') { | |||

| page.path = format!("{}/", page.path); | |||

| @@ -168,10 +201,14 @@ impl Page { | |||

| } | |||

| /// Read and parse a .md file into a Page struct | |||

| pub fn from_file<P: AsRef<Path>>(path: P, config: &Config) -> Result<Page> { | |||

| pub fn from_file<P: AsRef<Path>>( | |||

| path: P, | |||

| config: &Config, | |||

| base_path: &PathBuf, | |||

| ) -> Result<Page> { | |||

| let path = path.as_ref(); | |||

| let content = read_file(path)?; | |||

| let mut page = Page::parse(path, &content, config)?; | |||

| let mut page = Page::parse(path, &content, config, base_path)?; | |||

| if page.file.name == "index" { | |||

| let parent_dir = path.parent().unwrap(); | |||

| @@ -218,8 +255,9 @@ impl Page { | |||

| context.tera_context.insert("page", &SerializingPage::from_page_basic(self, None)); | |||

| let res = render_content(&self.raw_content, &context) | |||

| .chain_err(|| format!("Failed to render content of {}", self.file.path.display()))?; | |||

| let res = render_content(&self.raw_content, &context).map_err(|e| { | |||

| Error::chain(format!("Failed to render content of {}", self.file.path.display()), e) | |||

| })?; | |||

| self.summary = res.summary_len.map(|l| res.body[0..l].to_owned()); | |||

| self.content = res.body; | |||

| @@ -240,9 +278,12 @@ impl Page { | |||

| context.insert("current_url", &self.permalink); | |||

| context.insert("current_path", &self.path); | |||

| context.insert("page", &self.to_serialized(library)); | |||

| context.insert("lang", &self.lang); | |||

| context.insert("toc", &self.toc); | |||

| render_template(&tpl_name, tera, &context, &config.theme) | |||

| .chain_err(|| format!("Failed to render page '{}'", self.file.path.display())) | |||

| render_template(&tpl_name, tera, context, &config.theme).map_err(|e| { | |||

| Error::chain(format!("Failed to render page '{}'", self.file.path.display()), e) | |||

| }) | |||

| } | |||

| /// Creates a vectors of asset URLs. | |||

| @@ -286,6 +327,8 @@ impl Default for Page { | |||

| toc: vec![], | |||

| word_count: None, | |||

| reading_time: None, | |||

| lang: String::new(), | |||

| translations: Vec::new(), | |||

| } | |||

| } | |||

| } | |||

| @@ -295,14 +338,14 @@ mod tests { | |||

| use std::collections::HashMap; | |||

| use std::fs::{create_dir, File}; | |||

| use std::io::Write; | |||

| use std::path::Path; | |||

| use std::path::{Path, PathBuf}; | |||

| use globset::{Glob, GlobSetBuilder}; | |||

| use tempfile::tempdir; | |||

| use tera::Tera; | |||

| use super::Page; | |||

| use config::Config; | |||

| use config::{Config, Language}; | |||

| use front_matter::InsertAnchor; | |||

| #[test] | |||

| @@ -314,7 +357,7 @@ description = "hey there" | |||

| slug = "hello-world" | |||

| +++ | |||

| Hello world"#; | |||

| let res = Page::parse(Path::new("post.md"), content, &Config::default()); | |||

| let res = Page::parse(Path::new("post.md"), content, &Config::default(), &PathBuf::new()); | |||

| assert!(res.is_ok()); | |||

| let mut page = res.unwrap(); | |||

| page.render_markdown( | |||

| @@ -340,7 +383,8 @@ Hello world"#; | |||

| Hello world"#; | |||

| let mut conf = Config::default(); | |||

| conf.base_url = "http://hello.com/".to_string(); | |||

| let res = Page::parse(Path::new("content/posts/intro/start.md"), content, &conf); | |||

| let res = | |||

| Page::parse(Path::new("content/posts/intro/start.md"), content, &conf, &PathBuf::new()); | |||

| assert!(res.is_ok()); | |||

| let page = res.unwrap(); | |||

| assert_eq!(page.path, "posts/intro/hello-world/"); | |||

| @@ -356,7 +400,7 @@ Hello world"#; | |||

| +++ | |||

| Hello world"#; | |||

| let config = Config::default(); | |||

| let res = Page::parse(Path::new("start.md"), content, &config); | |||

| let res = Page::parse(Path::new("start.md"), content, &config, &PathBuf::new()); | |||

| assert!(res.is_ok()); | |||

| let page = res.unwrap(); | |||

| assert_eq!(page.path, "hello-world/"); | |||

| @@ -372,7 +416,12 @@ Hello world"#; | |||

| +++ | |||

| Hello world"#; | |||

| let config = Config::default(); | |||

| let res = Page::parse(Path::new("content/posts/intro/start.md"), content, &config); | |||

| let res = Page::parse( | |||

| Path::new("content/posts/intro/start.md"), | |||

| content, | |||

| &config, | |||

| &PathBuf::new(), | |||

| ); | |||

| assert!(res.is_ok()); | |||

| let page = res.unwrap(); | |||

| assert_eq!(page.path, "hello-world/"); | |||

| @@ -388,7 +437,12 @@ Hello world"#; | |||

| +++ | |||

| Hello world"#; | |||

| let config = Config::default(); | |||

| let res = Page::parse(Path::new("content/posts/intro/start.md"), content, &config); | |||

| let res = Page::parse( | |||

| Path::new("content/posts/intro/start.md"), | |||

| content, | |||

| &config, | |||

| &PathBuf::new(), | |||

| ); | |||

| assert!(res.is_ok()); | |||

| let page = res.unwrap(); | |||

| assert_eq!(page.path, "hello-world/"); | |||

| @@ -404,14 +458,15 @@ Hello world"#; | |||

| slug = "hello-world" | |||

| +++ | |||

| Hello world"#; | |||

| let res = Page::parse(Path::new("start.md"), content, &Config::default()); | |||

| let res = Page::parse(Path::new("start.md"), content, &Config::default(), &PathBuf::new()); | |||

| assert!(res.is_err()); | |||

| } | |||

| #[test] | |||

| fn can_make_slug_from_non_slug_filename() { | |||

| let config = Config::default(); | |||

| let res = Page::parse(Path::new(" file with space.md"), "+++\n+++", &config); | |||

| let res = | |||

| Page::parse(Path::new(" file with space.md"), "+++\n+++", &config, &PathBuf::new()); | |||

| assert!(res.is_ok()); | |||

| let page = res.unwrap(); | |||

| assert_eq!(page.slug, "file-with-space"); | |||

| @@ -427,7 +482,7 @@ Hello world"#; | |||

| Hello world | |||

| <!-- more -->"# | |||

| .to_string(); | |||

| let res = Page::parse(Path::new("hello.md"), &content, &config); | |||

| let res = Page::parse(Path::new("hello.md"), &content, &config, &PathBuf::new()); | |||

| assert!(res.is_ok()); | |||

| let mut page = res.unwrap(); | |||

| page.render_markdown(&HashMap::default(), &Tera::default(), &config, InsertAnchor::None) | |||

| @@ -449,7 +504,11 @@ Hello world | |||

| File::create(nested_path.join("graph.jpg")).unwrap(); | |||

| File::create(nested_path.join("fail.png")).unwrap(); | |||

| let res = Page::from_file(nested_path.join("index.md").as_path(), &Config::default()); | |||

| let res = Page::from_file( | |||

| nested_path.join("index.md").as_path(), | |||

| &Config::default(), | |||

| &PathBuf::new(), | |||

| ); | |||

| assert!(res.is_ok()); | |||

| let page = res.unwrap(); | |||

| assert_eq!(page.file.parent, path.join("content").join("posts")); | |||

| @@ -472,7 +531,11 @@ Hello world | |||

| File::create(nested_path.join("graph.jpg")).unwrap(); | |||

| File::create(nested_path.join("fail.png")).unwrap(); | |||

| let res = Page::from_file(nested_path.join("index.md").as_path(), &Config::default()); | |||

| let res = Page::from_file( | |||

| nested_path.join("index.md").as_path(), | |||

| &Config::default(), | |||

| &PathBuf::new(), | |||

| ); | |||

| assert!(res.is_ok()); | |||

| let page = res.unwrap(); | |||

| assert_eq!(page.file.parent, path.join("content").join("posts")); | |||

| @@ -481,6 +544,35 @@ Hello world | |||

| assert_eq!(page.permalink, "http://a-website.com/posts/hey/"); | |||

| } | |||

| // https://github.com/getzola/zola/issues/607 | |||

| #[test] | |||

| fn page_with_assets_and_date_in_folder_name() { | |||

| let tmp_dir = tempdir().expect("create temp dir"); | |||

| let path = tmp_dir.path(); | |||

| create_dir(&path.join("content")).expect("create content temp dir"); | |||

| create_dir(&path.join("content").join("posts")).expect("create posts temp dir"); | |||

| let nested_path = path.join("content").join("posts").join("2013-06-02_with-assets"); | |||

| create_dir(&nested_path).expect("create nested temp dir"); | |||

| let mut f = File::create(nested_path.join("index.md")).unwrap(); | |||

| f.write_all(b"+++\n\n+++\n").unwrap(); | |||

| File::create(nested_path.join("example.js")).unwrap(); | |||

| File::create(nested_path.join("graph.jpg")).unwrap(); | |||

| File::create(nested_path.join("fail.png")).unwrap(); | |||

| let res = Page::from_file( | |||

| nested_path.join("index.md").as_path(), | |||

| &Config::default(), | |||

| &PathBuf::new(), | |||

| ); | |||

| assert!(res.is_ok()); | |||

| let page = res.unwrap(); | |||

| assert_eq!(page.file.parent, path.join("content").join("posts")); | |||

| assert_eq!(page.slug, "with-assets"); | |||

| assert_eq!(page.meta.date, Some("2013-06-02".to_string())); | |||

| assert_eq!(page.assets.len(), 3); | |||

| assert_eq!(page.permalink, "http://a-website.com/posts/with-assets/"); | |||

| } | |||

| #[test] | |||

| fn page_with_ignored_assets_filters_out_correct_files() { | |||

| let tmp_dir = tempdir().expect("create temp dir"); | |||

| @@ -500,7 +592,7 @@ Hello world | |||

| let mut config = Config::default(); | |||

| config.ignored_content_globset = Some(gsb.build().unwrap()); | |||

| let res = Page::from_file(nested_path.join("index.md").as_path(), &config); | |||

| let res = Page::from_file(nested_path.join("index.md").as_path(), &config, &PathBuf::new()); | |||

| assert!(res.is_ok()); | |||

| let page = res.unwrap(); | |||

| @@ -517,7 +609,7 @@ Hello world | |||

| Hello world | |||

| <!-- more -->"# | |||

| .to_string(); | |||

| let res = Page::parse(Path::new("2018-10-08_hello.md"), &content, &config); | |||

| let res = Page::parse(Path::new("2018-10-08_hello.md"), &content, &config, &PathBuf::new()); | |||

| assert!(res.is_ok()); | |||

| let page = res.unwrap(); | |||

| @@ -534,7 +626,12 @@ Hello world | |||

| Hello world | |||

| <!-- more -->"# | |||

| .to_string(); | |||

| let res = Page::parse(Path::new("2018-10-02T15:00:00Z-hello.md"), &content, &config); | |||

| let res = Page::parse( | |||

| Path::new("2018-10-02T15:00:00Z-hello.md"), | |||

| &content, | |||

| &config, | |||

| &PathBuf::new(), | |||

| ); | |||

| assert!(res.is_ok()); | |||

| let page = res.unwrap(); | |||

| @@ -552,11 +649,65 @@ date = 2018-09-09 | |||

| Hello world | |||

| <!-- more -->"# | |||

| .to_string(); | |||

| let res = Page::parse(Path::new("2018-10-08_hello.md"), &content, &config); | |||

| let res = Page::parse(Path::new("2018-10-08_hello.md"), &content, &config, &PathBuf::new()); | |||

| assert!(res.is_ok()); | |||

| let page = res.unwrap(); | |||

| assert_eq!(page.meta.date, Some("2018-09-09".to_string())); | |||

| assert_eq!(page.slug, "hello"); | |||

| } | |||

| #[test] | |||

| fn can_specify_language_in_filename() { | |||

| let mut config = Config::default(); | |||

| config.languages.push(Language { code: String::from("fr"), rss: false }); | |||

| let content = r#" | |||

| +++ | |||

| +++ | |||

| Bonjour le monde"# | |||

| .to_string(); | |||

| let res = Page::parse(Path::new("hello.fr.md"), &content, &config, &PathBuf::new()); | |||

| assert!(res.is_ok()); | |||

| let page = res.unwrap(); | |||

| assert_eq!(page.lang, "fr".to_string()); | |||

| assert_eq!(page.slug, "hello"); | |||

| assert_eq!(page.permalink, "http://a-website.com/fr/hello/"); | |||

| } | |||

| #[test] | |||

| fn can_specify_language_in_filename_with_date() { | |||

| let mut config = Config::default(); | |||

| config.languages.push(Language { code: String::from("fr"), rss: false }); | |||

| let content = r#" | |||

| +++ | |||

| +++ | |||

| Bonjour le monde"# | |||

| .to_string(); | |||

| let res = | |||

| Page::parse(Path::new("2018-10-08_hello.fr.md"), &content, &config, &PathBuf::new()); | |||

| assert!(res.is_ok()); | |||

| let page = res.unwrap(); | |||

| assert_eq!(page.meta.date, Some("2018-10-08".to_string())); | |||

| assert_eq!(page.lang, "fr".to_string()); | |||

| assert_eq!(page.slug, "hello"); | |||

| assert_eq!(page.permalink, "http://a-website.com/fr/hello/"); | |||

| } | |||

| #[test] | |||

| fn i18n_frontmatter_path_overrides_default_permalink() { | |||

| let mut config = Config::default(); | |||

| config.languages.push(Language { code: String::from("fr"), rss: false }); | |||

| let content = r#" | |||

| +++ | |||

| path = "bonjour" | |||

| +++ | |||

| Bonjour le monde"# | |||

| .to_string(); | |||

| let res = Page::parse(Path::new("hello.fr.md"), &content, &config, &PathBuf::new()); | |||

| assert!(res.is_ok()); | |||

| let page = res.unwrap(); | |||

| assert_eq!(page.lang, "fr".to_string()); | |||

| assert_eq!(page.slug, "hello"); | |||

| assert_eq!(page.permalink, "http://a-website.com/bonjour/"); | |||

| } | |||

| } | |||
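The default page path built in `Page::parse` (the branch taken when the front matter sets no `path`) can be sketched as a standalone function. This is an illustration under assumptions: `page_path` and its parameters are hypothetical names, and the front-matter `path` override is deliberately left out.

```rust
/// Hypothetical helper (not part of the diff): builds the default URL path
/// of a page from its directory components, slug and language.
fn page_path(components: &[&str], slug: &str, lang: &str, default_lang: &str) -> String {
    // Pages at the content root have no components, so the slug is the path.
    let mut path = if components.is_empty() {
        slug.to_string()
    } else {
        format!("{}/{}", components.join("/"), slug)
    };
    // Only non-default languages get a language prefix.
    if lang != default_lang {
        path = format!("{}/{}", lang, path);
    }
    // Paths always end with a trailing slash.
    if !path.ends_with('/') {
        path.push('/');
    }
    path
}
```

For example, `page_path(&[], "hello", "fr", "en")` gives `fr/hello/`, which is consistent with the `/fr/hello/` permalink asserted in `can_specify_language_in_filename`.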

+ 92

- 15

components/library/src/content/section.rs

| @@ -5,7 +5,7 @@ use slotmap::Key; | |||

| use tera::{Context as TeraContext, Tera}; | |||

| use config::Config; | |||

| use errors::{Result, ResultExt}; | |||

| use errors::{Error, Result}; | |||

| use front_matter::{split_section_content, SectionFrontMatter}; | |||

| use rendering::{render_content, Header, RenderContext}; | |||

| use utils::fs::{find_related_assets, read_file}; | |||

| @@ -51,14 +51,23 @@ pub struct Section { | |||

| /// How long would it take to read the raw content. | |||

| /// See `get_reading_analytics` on how it is calculated | |||

| pub reading_time: Option<usize>, | |||

| /// The language of that section. Equal to the default lang if the user doesn't setup `languages` in config. | |||

| /// Corresponds to the lang in the _index.{lang}.md file scheme | |||

| pub lang: String, | |||

| /// Contains all the translated version of that section | |||

| pub translations: Vec<Key>, | |||

| } | |||

| impl Section { | |||

| pub fn new<P: AsRef<Path>>(file_path: P, meta: SectionFrontMatter) -> Section { | |||

| pub fn new<P: AsRef<Path>>( | |||

| file_path: P, | |||

| meta: SectionFrontMatter, | |||

| base_path: &PathBuf, | |||

| ) -> Section { | |||

| let file_path = file_path.as_ref(); | |||

| Section { | |||

| file: FileInfo::new_section(file_path), | |||

| file: FileInfo::new_section(file_path, base_path), | |||

| meta, | |||

| ancestors: vec![], | |||

| path: "".to_string(), | |||

| @@ -74,17 +83,30 @@ impl Section { | |||

| toc: vec![], | |||

| word_count: None, | |||

| reading_time: None, | |||

| lang: String::new(), | |||

| translations: Vec::new(), | |||

| } | |||

| } | |||

| pub fn parse(file_path: &Path, content: &str, config: &Config) -> Result<Section> { | |||

| pub fn parse( | |||

| file_path: &Path, | |||

| content: &str, | |||

| config: &Config, | |||

| base_path: &PathBuf, | |||

| ) -> Result<Section> { | |||

| let (meta, content) = split_section_content(file_path, content)?; | |||

| let mut section = Section::new(file_path, meta); | |||

| let mut section = Section::new(file_path, meta, base_path); | |||

| section.lang = section.file.find_language(config)?; | |||

| section.raw_content = content; | |||

| let (word_count, reading_time) = get_reading_analytics(&section.raw_content); | |||

| section.word_count = Some(word_count); | |||

| section.reading_time = Some(reading_time); | |||

| section.path = format!("{}/", section.file.components.join("/")); | |||

| let path = section.file.components.join("/"); | |||

| if section.lang != config.default_language { | |||

| section.path = format!("{}/{}", section.lang, path); | |||

| } else { | |||

| section.path = format!("{}/", path); | |||

| } | |||

| section.components = section | |||

| .path | |||

| .split('/') | |||

| @@ -96,10 +118,14 @@ impl Section { | |||

| } | |||

| /// Read and parse a .md file into a Page struct | |||

| pub fn from_file<P: AsRef<Path>>(path: P, config: &Config) -> Result<Section> { | |||

| pub fn from_file<P: AsRef<Path>>( | |||

| path: P, | |||

| config: &Config, | |||

| base_path: &PathBuf, | |||

| ) -> Result<Section> { | |||

| let path = path.as_ref(); | |||

| let content = read_file(path)?; | |||

| let mut section = Section::parse(path, &content, config)?; | |||

| let mut section = Section::parse(path, &content, config, base_path)?; | |||

| let parent_dir = path.parent().unwrap(); | |||

| let assets = find_related_assets(parent_dir); | |||

| @@ -158,8 +184,9 @@ impl Section { | |||

| context.tera_context.insert("section", &SerializingSection::from_section_basic(self, None)); | |||

| let res = render_content(&self.raw_content, &context) | |||

| .chain_err(|| format!("Failed to render content of {}", self.file.path.display()))?; | |||

| let res = render_content(&self.raw_content, &context).map_err(|e| { | |||

| Error::chain(format!("Failed to render content of {}", self.file.path.display()), e) | |||

| })?; | |||

| self.content = res.body; | |||

| self.toc = res.toc; | |||

| Ok(()) | |||

| @@ -174,9 +201,12 @@ impl Section { | |||

| context.insert("current_url", &self.permalink); | |||

| context.insert("current_path", &self.path); | |||

| context.insert("section", &self.to_serialized(library)); | |||

| context.insert("lang", &self.lang); | |||

| context.insert("toc", &self.toc); | |||

| render_template(tpl_name, tera, &context, &config.theme) | |||

| .chain_err(|| format!("Failed to render section '{}'", self.file.path.display())) | |||

| render_template(tpl_name, tera, context, &config.theme).map_err(|e| { | |||

| Error::chain(format!("Failed to render section '{}'", self.file.path.display()), e) | |||

| }) | |||

| } | |||

| /// Is this the index section? | |||

| @@ -223,6 +253,8 @@ impl Default for Section { | |||

| toc: vec![], | |||

| reading_time: None, | |||

| word_count: None, | |||

| lang: String::new(), | |||

| translations: Vec::new(), | |||

| } | |||

| } | |||

| } | |||

| @@ -231,12 +263,13 @@ impl Default for Section { | |||

| mod tests { | |||

| use std::fs::{create_dir, File}; | |||

| use std::io::Write; | |||

| use std::path::{Path, PathBuf}; | |||

| use globset::{Glob, GlobSetBuilder}; | |||

| use tempfile::tempdir; | |||

| use super::Section; | |||

| use config::Config; | |||

| use config::{Config, Language}; | |||

| #[test] | |||

| fn section_with_assets_gets_right_info() { | |||

| @@ -252,7 +285,11 @@ mod tests { | |||

| File::create(nested_path.join("graph.jpg")).unwrap(); | |||

| File::create(nested_path.join("fail.png")).unwrap(); | |||

| let res = Section::from_file(nested_path.join("_index.md").as_path(), &Config::default()); | |||

| let res = Section::from_file( | |||

| nested_path.join("_index.md").as_path(), | |||

| &Config::default(), | |||

| &PathBuf::new(), | |||

| ); | |||

| assert!(res.is_ok()); | |||

| let section = res.unwrap(); | |||

| assert_eq!(section.assets.len(), 3); | |||

| @@ -278,11 +315,51 @@ mod tests { | |||

| let mut config = Config::default(); | |||

| config.ignored_content_globset = Some(gsb.build().unwrap()); | |||

| let res = Section::from_file(nested_path.join("_index.md").as_path(), &config); | |||

| let res = | |||

| Section::from_file(nested_path.join("_index.md").as_path(), &config, &PathBuf::new()); | |||

| assert!(res.is_ok()); | |||

| let page = res.unwrap(); | |||

| assert_eq!(page.assets.len(), 1); | |||

| assert_eq!(page.assets[0].file_name().unwrap().to_str(), Some("graph.jpg")); | |||

| } | |||

| #[test] | |||

| fn can_specify_language_in_filename() { | |||

| let mut config = Config::default(); | |||

| config.languages.push(Language { code: String::from("fr"), rss: false }); | |||

| let content = r#" | |||

| +++ | |||

| +++ | |||

| Bonjour le monde"# | |||

| .to_string(); | |||

| let res = Section::parse( | |||

| Path::new("content/hello/nested/_index.fr.md"), | |||

| &content, | |||

| &config, | |||

| &PathBuf::new(), | |||

| ); | |||

| assert!(res.is_ok()); | |||

| let section = res.unwrap(); | |||

| assert_eq!(section.lang, "fr".to_string()); | |||

| assert_eq!(section.permalink, "http://a-website.com/fr/hello/nested/"); | |||

| } | |||

| // https://zola.discourse.group/t/rfc-i18n/13/17?u=keats | |||

| #[test] | |||

| fn can_make_links_to_translated_sections_without_double_trailing_slash() { | |||

| let mut config = Config::default(); | |||

| config.languages.push(Language { code: String::from("fr"), rss: false }); | |||

| let content = r#" | |||

| +++ | |||

| +++ | |||

| Bonjour le monde"# | |||

| .to_string(); | |||

| let res = | |||

| Section::parse(Path::new("content/_index.fr.md"), &content, &config, &PathBuf::new()); | |||

| assert!(res.is_ok()); | |||

| let section = res.unwrap(); | |||

| assert_eq!(section.lang, "fr".to_string()); | |||

| assert_eq!(section.permalink, "http://a-website.com/fr/"); | |||

| } | |||

| } | |||
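The two new tests above pin down the observable behaviour: a file named `_index.fr.md` yields `lang == "fr"` and a permalink prefixed with `/fr/`, with no doubled trailing slash for the root section. Below is a minimal sketch of logic that would satisfy those assertions. It is not the code from this commit: `lang_from_file_name` and `make_permalink` are hypothetical helpers, and a plain slice of language codes stands in for `config.languages`.

```rust
/// Hypothetical helper (not the project's implementation): given a file name
/// such as `_index.fr.md`, return the language code if the component before
/// the extension matches one of the configured languages.
fn lang_from_file_name<'a>(file_name: &str, languages: &[&'a str]) -> Option<&'a str> {
    // Drop the extension: "_index.fr.md" -> "_index.fr"
    let stem = file_name.strip_suffix(".md")?;
    // Take whatever follows the last dot of the stem; no dot means no language
    let (_, candidate) = stem.rsplit_once('.')?;
    languages.iter().copied().find(|code| *code == candidate)
}

/// Hypothetical helper: join base URL, optional language code and path,
/// always ending in exactly one trailing slash.
fn make_permalink(base_url: &str, lang: Option<&str>, path: &str) -> String {
    let mut parts: Vec<&str> = Vec::new();
    if let Some(code) = lang {
        parts.push(code);
    }
    // An empty path (the root section) contributes no component at all,
    // which is what avoids a double trailing slash
    let trimmed = path.trim_matches('/');
    if !trimmed.is_empty() {
        parts.push(trimmed);
    }
    let base = base_url.trim_end_matches('/');
    if parts.is_empty() {
        format!("{}/", base)
    } else {
        format!("{}/{}/", base, parts.join("/"))
    }
}

fn main() {
    let languages = ["fr"];
    let lang = lang_from_file_name("_index.fr.md", &languages);
    println!("{}", make_permalink("http://a-website.com", lang, "hello/nested"));
    println!("{}", make_permalink("http://a-website.com", lang, ""));
}
```

Skipping the empty path component, rather than trimming slashes after the fact, is one way to keep the root-section case from producing `/fr//`.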

+ 68

- 8

components/library/src/content/ser.rs


| @@ -5,7 +5,46 @@ use tera::{Map, Value}; | |||

| use content::{Page, Section}; | |||

| use library::Library; | |||

| use rendering::Header; | |||

| #[derive(Clone, Debug, PartialEq, Serialize)] | |||

| pub struct TranslatedContent<'a> { | |||

| lang: &'a str, | |||

| permalink: &'a str, | |||

| title: &'a Option<String>, | |||

| } | |||

| impl<'a> TranslatedContent<'a> { | |||

| // copypaste eh, not worth creating an enum imo | |||

| pub fn find_all_sections(section: &'a Section, library: &'a Library) -> Vec<Self> { | |||

| let mut translations = vec![]; | |||

| for key in &section.translations { | |||

| let other = library.get_section_by_key(*key); | |||

| translations.push(TranslatedContent { | |||

| lang: &other.lang, | |||

| permalink: &other.permalink, | |||

| title: &other.meta.title, | |||

| }); | |||

| } | |||

| translations | |||

| } | |||

| pub fn find_all_pages(page: &'a Page, library: &'a Library) -> Vec<Self> { | |||

| let mut translations = vec![]; | |||

| for key in &page.translations { | |||

| let other = library.get_page_by_key(*key); | |||

| translations.push(TranslatedContent { | |||

| lang: &other.lang, | |||

| permalink: &other.permalink, | |||

| title: &other.meta.title, | |||

| }); | |||

| } | |||

| translations | |||

| } | |||

| } | |||

| #[derive(Clone, Debug, PartialEq, Serialize)] | |||

| pub struct SerializingPage<'a> { | |||

| @@ -27,13 +66,14 @@ pub struct SerializingPage<'a> { | |||

| summary: &'a Option<String>, | |||

| word_count: Option<usize>, | |||

| reading_time: Option<usize>, | |||

| toc: &'a [Header], | |||

| assets: &'a [String], | |||

| draft: bool, | |||

| lang: &'a str, | |||

| lighter: Option<Box<SerializingPage<'a>>>, | |||

| heavier: Option<Box<SerializingPage<'a>>>, | |||

| earlier: Option<Box<SerializingPage<'a>>>, | |||

| later: Option<Box<SerializingPage<'a>>>, | |||

| translations: Vec<TranslatedContent<'a>>, | |||

| } | |||

| impl<'a> SerializingPage<'a> { | |||

| @@ -66,6 +106,8 @@ impl<'a> SerializingPage<'a> { | |||

| .map(|k| library.get_section_by_key(*k).file.relative.clone()) | |||

| .collect(); | |||

| let translations = TranslatedContent::find_all_pages(page, library); | |||

| SerializingPage { | |||

| relative_path: &page.file.relative, | |||

| ancestors, | |||

| @@ -85,13 +127,14 @@ impl<'a> SerializingPage<'a> { | |||

| summary: &page.summary, | |||

| word_count: page.word_count, | |||

| reading_time: page.reading_time, | |||

| toc: &page.toc, | |||

| assets: &page.serialized_assets, | |||

| draft: page.is_draft(), | |||

| lang: &page.lang, | |||

| lighter, | |||

| heavier, | |||

| earlier, | |||

| later, | |||

| translations, | |||

| } | |||

| } | |||

| @@ -114,6 +157,12 @@ impl<'a> SerializingPage<'a> { | |||

| vec![] | |||

| }; | |||

| let translations = if let Some(ref lib) = library { | |||

| TranslatedContent::find_all_pages(page, lib) | |||

| } else { | |||

| vec![] | |||

| }; | |||

| SerializingPage { | |||

| relative_path: &page.file.relative, | |||

| ancestors, | |||

| @@ -133,13 +182,14 @@ impl<'a> SerializingPage<'a> { | |||

| summary: &page.summary, | |||

| word_count: page.word_count, | |||

| reading_time: page.reading_time, | |||

| toc: &page.toc, | |||

| assets: &page.serialized_assets, | |||

| draft: page.is_draft(), | |||

| lang: &page.lang, | |||

| lighter: None, | |||

| heavier: None, | |||

| earlier: None, | |||

| later: None, | |||

| translations, | |||

| } | |||

| } | |||

| } | |||

| @@ -157,10 +207,11 @@ pub struct SerializingSection<'a> { | |||

| components: &'a [String], | |||

| word_count: Option<usize>, | |||

| reading_time: Option<usize>, | |||

| toc: &'a [Header], | |||

| lang: &'a str, | |||

| assets: &'a [String], | |||

| pages: Vec<SerializingPage<'a>>, | |||

| subsections: Vec<&'a str>, | |||

| translations: Vec<TranslatedContent<'a>>, | |||

| } | |||

| impl<'a> SerializingSection<'a> { | |||

| @@ -169,7 +220,7 @@ impl<'a> SerializingSection<'a> { | |||

| let mut subsections = Vec::with_capacity(section.subsections.len()); | |||

| for k in &section.pages { | |||

| pages.push(library.get_page_by_key(*k).to_serialized(library)); | |||

| pages.push(library.get_page_by_key(*k).to_serialized_basic(library)); | |||

| } | |||

| for k in &section.subsections { | |||

| @@ -181,6 +232,7 @@ impl<'a> SerializingSection<'a> { | |||

| .iter() | |||

| .map(|k| library.get_section_by_key(*k).file.relative.clone()) | |||