100 changed files with 4400 additions and 1828 deletions

-

+1 -1.travis.yml

-

+24 -0CHANGELOG.md

-

+1485 -375Cargo.lock

-

+4 -4Cargo.toml

-

+10 -6README.md

-

+1 -1appveyor.yml

-

+42 -7components/config/src/lib.rs

-

+2 -2components/config/src/theme.rs

-

+2 -1components/content/Cargo.toml

-

+6 -6components/content/benches/all.rs

-

+3 -2components/content/src/lib.rs

-

+64 -29components/content/src/page.rs

-

+9 -6components/content/src/section.rs

-

+57 -126components/content/src/sorting.rs

-

+2 -1components/errors/Cargo.toml

-

+3 -1components/errors/src/lib.rs

-

+0 -1components/front_matter/Cargo.toml

-

+0 -3components/front_matter/src/lib.rs

-

+25 -50components/front_matter/src/page.rs

-

+0 -0components/highlighting/examples/generate_sublime.rs

-

+16 -3components/highlighting/src/lib.rs

-

+14 -0components/imageproc/Cargo.toml

-

+384 -0components/imageproc/src/lib.rs

-

+8 -0components/link_checker/Cargo.toml

-

+88 -0components/link_checker/src/lib.rs

-

+1 -0components/pagination/Cargo.toml

-

+121 -27components/pagination/src/lib.rs

-

+1 -1components/rebuild/Cargo.toml

-

+37 -58components/rebuild/src/lib.rs

-

+9 -9components/rebuild/tests/rebuild.rs

-

+6 -3components/rendering/Cargo.toml

-

+40 -9components/rendering/benches/all.rs

-

+72 -0components/rendering/src/content.pest

-

+16 -19components/rendering/src/context.rs

-

+22 -6components/rendering/src/lib.rs

-

+156 -236components/rendering/src/markdown.rs

-

+0 -190components/rendering/src/short_code.rs

-

+362 -0components/rendering/src/shortcode.rs

-

+8 -10components/rendering/src/table_of_contents.rs

-

+208 -72components/rendering/tests/markdown.rs

-

+2 -1components/site/Cargo.toml

-

+7 -7components/site/benches/render.rs

-

+2 -2components/site/benches/site.rs

-

+172 -110components/site/src/lib.rs

-

+25 -72components/site/tests/site.rs

-

+117 -81components/taxonomies/src/lib.rs

-

+1 -0components/templates/Cargo.toml

-

+10 -0components/templates/src/builtins/404.html

-

+4 -4components/templates/src/builtins/rss.xml

-

+4 -7components/templates/src/builtins/sitemap.xml

-

+1 -1components/templates/src/filters.rs

-

+178 -39components/templates/src/global_fns.rs

-

+2 -1components/templates/src/lib.rs

-

+2 -2components/utils/Cargo.toml

-

+29 -3components/utils/src/fs.rs

-

+3 -1components/utils/src/lib.rs

-

+14 -0components/utils/src/net.rs

-

+3 -3components/utils/src/site.rs

-

+6 -6components/utils/src/templates.rs

-

+1 -0docs/config.toml

-

BINdocs/content/documentation/content/image-processing/example-00.jpg

-

BINdocs/content/documentation/content/image-processing/example-01.jpg

-

BINdocs/content/documentation/content/image-processing/example-02.jpg

-

BINdocs/content/documentation/content/image-processing/example-03.jpg

-

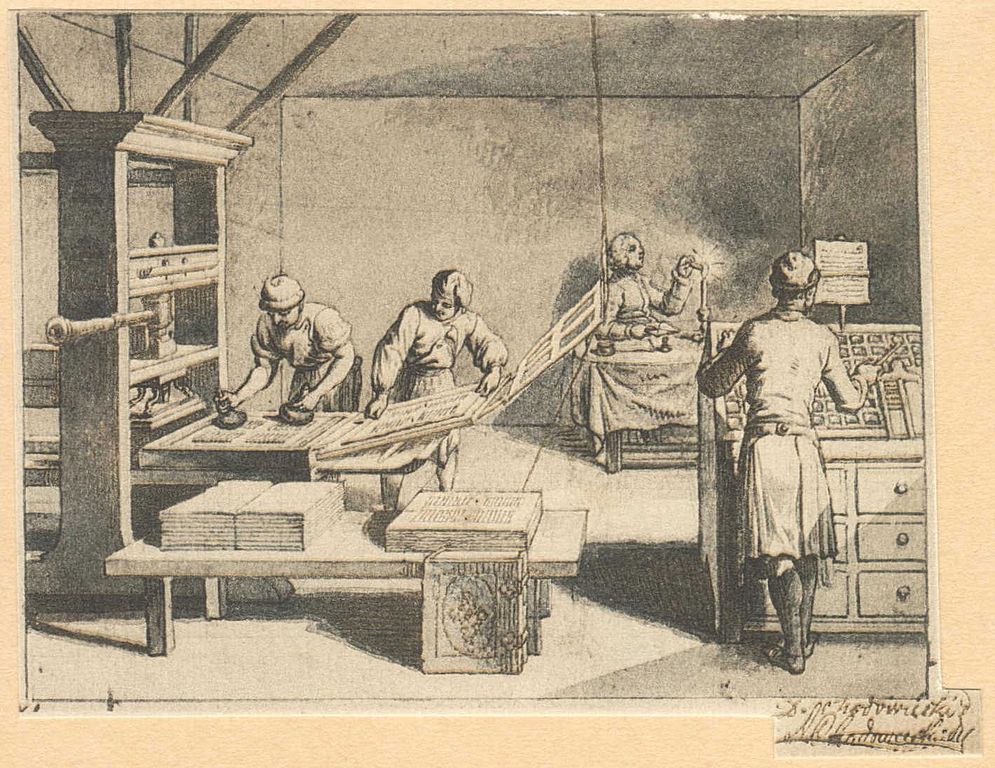

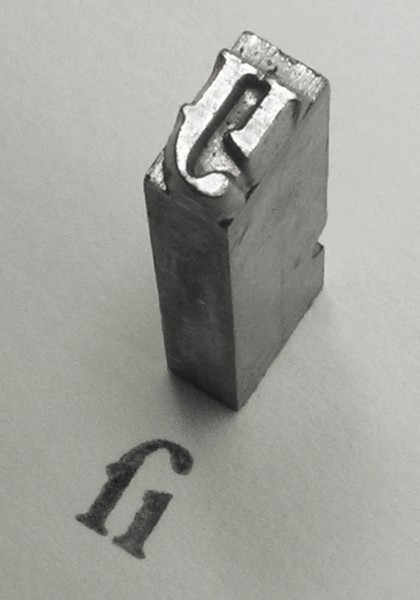

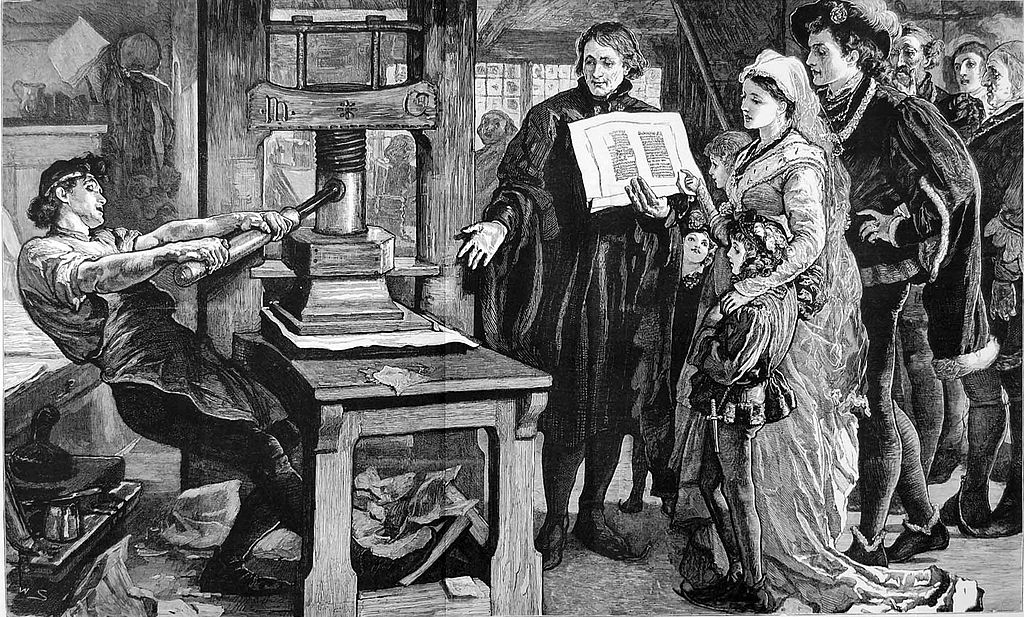

BINdocs/content/documentation/content/image-processing/gutenberg.jpg

-

+134 -0docs/content/documentation/content/image-processing/index.md

-

+22 -21docs/content/documentation/content/page.md

-

+25 -59docs/content/documentation/content/section.md

-

+42 -29docs/content/documentation/content/shortcodes.md

-

+0 -23docs/content/documentation/content/tags-categories.md

-

+36 -0docs/content/documentation/content/taxonomies.md

-

+15 -7docs/content/documentation/getting-started/configuration.md

-

+8 -0docs/content/documentation/templates/404.md

-

+23 -0docs/content/documentation/templates/archive.md

-

+24 -13docs/content/documentation/templates/overview.md

-

+14 -5docs/content/documentation/templates/pages-sections.md

-

+7 -2docs/content/documentation/templates/pagination.md

-

+0 -31docs/content/documentation/templates/tags-categories.md

-

+51 -0docs/content/documentation/templates/taxonomies.md

-

BINdocs/static/_processed_images/0478482c742970ac00.jpg

-

BINdocs/static/_processed_images/2b6a3e5a28bab1f100.jpg

-

BINdocs/static/_processed_images/3dba59a146f3bc0900.jpg

-

BINdocs/static/_processed_images/5e399fa94c88057a00.jpg

-

BINdocs/static/_processed_images/63d5c27341a9885c00.jpg

-

BINdocs/static/_processed_images/63fe884d13fd318d00.jpg

-

BINdocs/static/_processed_images/8b446e542d0b692d00.jpg

-

BINdocs/static/_processed_images/ab39b603591b3e3300.jpg

-

BINdocs/static/_processed_images/d91d0751df06edce00.jpg

-

BINdocs/static/_processed_images/e690cdfaf053bbd700.jpg

-

+8 -0docs/templates/shortcodes/gallery.html

-

+1 -0docs/templates/shortcodes/resize_image.html

-

+85 -24src/cmd/serve.rs

-

+3 -2src/console.rs

-

+2 -4src/main.rs

-

BINsublime_syntaxes/newlines.packdump

-

BINsublime_syntaxes/nonewlines.packdump

-

+2 -1test_site/config.staging.toml

-

+4 -0test_site/config.toml

-

+1 -1test_site/content/posts/fixed-slug.md

-

+1 -1test_site/content/posts/tutorials/devops/_index.md

+ 1

- 1

.travis.yml

| @@ -21,7 +21,7 @@ matrix: | |||||

| rust: nightly | rust: nightly | ||||

| # The earliest stable Rust version that works | # The earliest stable Rust version that works | ||||

| - env: TARGET=x86_64-unknown-linux-gnu | - env: TARGET=x86_64-unknown-linux-gnu | ||||

| rust: 1.23.0 | |||||

| rust: 1.27.0 | |||||

| before_install: set -e | before_install: set -e | ||||

+ 24

- 0

CHANGELOG.md

| @@ -1,5 +1,29 @@ | |||||

| # Changelog | # Changelog | ||||

| ## 0.4.0 (unreleased) | |||||

| ### Breaking | |||||

| - Taxonomies have been rewritten from scratch to allow custom ones with RSS and pagination | |||||

| - `order` sorting has been removed in favour of only having `weight` | |||||

| - `page.next/page.previous` have been renamed to `page.later/page.earlier` and `page.heavier/page.lighter` depending on the sort method | |||||

| ### Others | |||||

| - Fix `serve` not working with the config flag | |||||

| - Websocket port on `live` will now get the first available port instead of a fixed one | |||||

| - Rewrite markdown rendering to fix all known issues with shortcodes | |||||

| - Add array arguments to shortcodes and allow single-quote/backtick strings | |||||

| - Co-located assets are now permalinks | |||||

| - Words are now counted using unicode rather than whitespaces | |||||

| - Aliases can now point directly to specific HTML files | |||||

| - Add `year`, `month` and `day` variables to pages with a date | |||||

| - Fix panic when live reloading a change on a file without extensions | |||||

| - Add image resizing support | |||||

| - Add a 404 template | |||||

| - Enable preserve-order feature of Tera | |||||

| - Add an external link checker | |||||

| - Add `get_taxonomy` global function to return the full taxonomy | |||||

| ## 0.3.4 (2018-06-22) | ## 0.3.4 (2018-06-22) | ||||

| - `cargo update` as some dependencies didn't compile with current Rust version | - `cargo update` as some dependencies didn't compile with current Rust version | ||||

+ 1485

- 375

Cargo.lock

File diff suppressed because it is too large

+ 4

- 4

Cargo.toml

| @@ -1,6 +1,6 @@ | |||||

| [package] | [package] | ||||

| name = "gutenberg" | name = "gutenberg" | ||||

| version = "0.3.4" | |||||

| version = "0.4.0" | |||||

| authors = ["Vincent Prouillet <prouillet.vincent@gmail.com>"] | authors = ["Vincent Prouillet <prouillet.vincent@gmail.com>"] | ||||

| license = "MIT" | license = "MIT" | ||||

| readme = "README.md" | readme = "README.md" | ||||

| @@ -24,9 +24,7 @@ term-painter = "0.2" | |||||

| # Used in init to ensure the url given as base_url is a valid one | # Used in init to ensure the url given as base_url is a valid one | ||||

| url = "1.5" | url = "1.5" | ||||

| # Below is for the serve cmd | # Below is for the serve cmd | ||||

| staticfile = "0.5" | |||||

| iron = "0.6" | |||||

| mount = "0.4" | |||||

| actix-web = { version = "0.7", default-features = false, features = [] } | |||||

| notify = "4" | notify = "4" | ||||

| ws = "0.7" | ws = "0.7" | ||||

| ctrlc = "3" | ctrlc = "3" | ||||

| @@ -53,4 +51,6 @@ members = [ | |||||

| "components/templates", | "components/templates", | ||||

| "components/utils", | "components/utils", | ||||

| "components/search", | "components/search", | ||||

| "components/imageproc", | |||||

| "components/link_checker", | |||||

| ] | ] | ||||

+ 10

- 6

README.md

| @@ -14,21 +14,24 @@ in the `docs/content` folder of the repository. | |||||

| | Single binary | ✔ | ✔ | ✔ | ✕ | | | Single binary | ✔ | ✔ | ✔ | ✕ | | ||||

| | Language | Rust | Rust | Go | Python | | | Language | Rust | Rust | Go | Python | | ||||

| | Syntax highlighting | ✔ | ✔ | ✔ | ✔ | | | Syntax highlighting | ✔ | ✔ | ✔ | ✔ | | ||||

| | Sass compilation | ✔ | ✕ | ✔ | ✔ | | |||||

| | Sass compilation | ✔ | ✔ | ✔ | ✔ | | |||||

| | Assets co-location | ✔ | ✔ | ✔ | ✔ | | | Assets co-location | ✔ | ✔ | ✔ | ✔ | | ||||

| | i18n | ✕ | ✕ | ✔ | ✔ | | | i18n | ✕ | ✕ | ✔ | ✔ | | ||||

| | Image processing | ✕ | ✕ | ✔ | ✔ | | |||||

| | Image processing | ✔ | ✕ | ✔ | ✔ | | |||||

| | Sane template engine | ✔ | ✔ | ✕✕✕ | ✔ | | | Sane template engine | ✔ | ✔ | ✕✕✕ | ✔ | | ||||

| | Themes | ✔ | ✕ | ✔ | ✔ | | | Themes | ✔ | ✕ | ✔ | ✔ | | ||||

| | Shortcodes | ✔ | ✕ | ✔ | ✔ | | | Shortcodes | ✔ | ✕ | ✔ | ✔ | | ||||

| | Internal links | ✔ | ✕ | ✔ | ✔ | | | Internal links | ✔ | ✕ | ✔ | ✔ | | ||||

| | Link checker | ✔ | ✕ | ✕ | ✔ | | |||||

| | Table of contents | ✔ | ✕ | ✔ | ✔ | | | Table of contents | ✔ | ✕ | ✔ | ✔ | | ||||

| | Automatic header anchors | ✔ | ✕ | ✔ | ✔ | | | Automatic header anchors | ✔ | ✕ | ✔ | ✔ | | ||||

| | Aliases | ✔ | ✕ | ✔ | ✔ | | | Aliases | ✔ | ✕ | ✔ | ✔ | | ||||

| | Pagination | ✔ | ✕ | ✔ | ✔ | | | Pagination | ✔ | ✕ | ✔ | ✔ | | ||||

| | Custom taxonomies | ✕ | ✕ | ✔ | ✕ | | |||||

| | Custom taxonomies | ✔ | ✕ | ✔ | ✕ | | |||||

| | Search | ✔ | ✕ | ✕ | ✔ | | | Search | ✔ | ✕ | ✕ | ✔ | | ||||

| | Data files | ✕ | ✔ | ✔ | ✕ | | | Data files | ✕ | ✔ | ✔ | ✕ | | ||||

| | LiveReload | ✔ | ✕ | ✔ | ✔ | | |||||

| | Netlify support | ✔ | ✕ | ✔ | ✕ | | |||||

| Supported content formats: | Supported content formats: | ||||

| @@ -38,7 +41,8 @@ Supported content formats: | |||||

| - Pelican: reStructuredText, markdown, asciidoc, org-mode, whatever-you-want | - Pelican: reStructuredText, markdown, asciidoc, org-mode, whatever-you-want | ||||

| Note that many features of Pelican are coming from plugins, which might be tricky | Note that many features of Pelican are coming from plugins, which might be tricky | ||||

| to use because of version mismatch or lacking documentation. | |||||

| to use because of version mismatch or lacking documentation. Netlify supports Python | |||||

| and Pipenv but you still need to install your dependencies manually. | |||||

| ## Contributing | ## Contributing | ||||

| As the documentation site is automatically built on commits to master, all development | As the documentation site is automatically built on commits to master, all development | ||||

| @@ -52,7 +56,7 @@ If you want a feature added or modified, please open an issue to discuss it befo | |||||

| Syntax highlighting depends on submodules so ensure you load them first: | Syntax highlighting depends on submodules so ensure you load them first: | ||||

| ```bash | ```bash | ||||

| $ git submodule update --init | |||||

| $ git submodule update --init | |||||

| ``` | ``` | ||||

| Gutenberg only works with syntaxes in the `.sublime-syntax` format. If your syntax | Gutenberg only works with syntaxes in the `.sublime-syntax` format. If your syntax | ||||

| @@ -75,7 +79,7 @@ You can check for any updates to the current packages by running: | |||||

| $ git submodule update --remote --merge | $ git submodule update --remote --merge | ||||

| ``` | ``` | ||||

| And finally from the root of the components/rendering crate run the following command: | |||||

| And finally from the root of the components/highlighting crate run the following command: | |||||

| ```bash | ```bash | ||||

| $ cargo run --example generate_sublime synpack ../../sublime_syntaxes ../../sublime_syntaxes/newlines.packdump ../../sublime_syntaxes/nonewlines.packdump | $ cargo run --example generate_sublime synpack ../../sublime_syntaxes ../../sublime_syntaxes/newlines.packdump ../../sublime_syntaxes/nonewlines.packdump | ||||

+ 1

- 1

appveyor.yml

| @@ -10,7 +10,7 @@ environment: | |||||

| matrix: | matrix: | ||||

| - target: x86_64-pc-windows-msvc | - target: x86_64-pc-windows-msvc | ||||

| RUST_VERSION: 1.25.0 | |||||

| RUST_VERSION: 1.27.0 | |||||

| - target: x86_64-pc-windows-msvc | - target: x86_64-pc-windows-msvc | ||||

| RUST_VERSION: stable | RUST_VERSION: stable | ||||

+ 42

- 7

components/config/src/lib.rs

| @@ -12,7 +12,7 @@ use std::fs::File; | |||||

| use std::io::prelude::*; | use std::io::prelude::*; | ||||

| use std::path::{Path, PathBuf}; | use std::path::{Path, PathBuf}; | ||||

| use toml::{Value as Toml}; | |||||

| use toml::Value as Toml; | |||||

| use chrono::Utc; | use chrono::Utc; | ||||

| use globset::{Glob, GlobSet, GlobSetBuilder}; | use globset::{Glob, GlobSet, GlobSetBuilder}; | ||||

| @@ -28,6 +28,40 @@ use theme::Theme; | |||||

| static DEFAULT_BASE_URL: &'static str = "http://a-website.com"; | static DEFAULT_BASE_URL: &'static str = "http://a-website.com"; | ||||

| #[derive(Clone, Debug, PartialEq, Eq, Serialize, Deserialize)] | |||||

| #[serde(default)] | |||||

| pub struct Taxonomy { | |||||

| /// The name used in the URL, usually the plural | |||||

| pub name: String, | |||||

| /// If this is set, the list of individual taxonomy term page will be paginated | |||||

| /// by this much | |||||

| pub paginate_by: Option<usize>, | |||||

| pub paginate_path: Option<String>, | |||||

| /// Whether to generate a RSS feed only for each taxonomy term, defaults to false | |||||

| pub rss: bool, | |||||

| } | |||||

| impl Taxonomy { | |||||

| pub fn is_paginated(&self) -> bool { | |||||

| if let Some(paginate_by) = self.paginate_by { | |||||

| paginate_by > 0 | |||||

| } else { | |||||

| false | |||||

| } | |||||

| } | |||||

| } | |||||

| impl Default for Taxonomy { | |||||

| fn default() -> Taxonomy { | |||||

| Taxonomy { | |||||

| name: String::new(), | |||||

| paginate_by: None, | |||||

| paginate_path: None, | |||||

| rss: false, | |||||

| } | |||||

| } | |||||

| } | |||||

| #[derive(Clone, Debug, Serialize, Deserialize)] | #[derive(Clone, Debug, Serialize, Deserialize)] | ||||

| #[serde(default)] | #[serde(default)] | ||||

| pub struct Config { | pub struct Config { | ||||

| @@ -56,10 +90,8 @@ pub struct Config { | |||||

| pub generate_rss: bool, | pub generate_rss: bool, | ||||

| /// The number of articles to include in the RSS feed. Defaults to 10_000 | /// The number of articles to include in the RSS feed. Defaults to 10_000 | ||||

| pub rss_limit: usize, | pub rss_limit: usize, | ||||

| /// Whether to generate tags and individual tag pages if some pages have them. Defaults to true | |||||

| pub generate_tags_pages: bool, | |||||

| /// Whether to generate categories and individual tag categories if some pages have them. Defaults to true | |||||

| pub generate_categories_pages: bool, | |||||

| pub taxonomies: Vec<Taxonomy>, | |||||

| /// Whether to compile the `sass` directory and output the css files into the static folder | /// Whether to compile the `sass` directory and output the css files into the static folder | ||||

| pub compile_sass: bool, | pub compile_sass: bool, | ||||

| @@ -72,6 +104,9 @@ pub struct Config { | |||||

| #[serde(skip_serializing, skip_deserializing)] // not a typo, 2 are needed | #[serde(skip_serializing, skip_deserializing)] // not a typo, 2 are needed | ||||

| pub ignored_content_globset: Option<GlobSet>, | pub ignored_content_globset: Option<GlobSet>, | ||||

| /// Whether to check all external links for validity | |||||

| pub check_external_links: bool, | |||||

| /// All user params set in [extra] in the config | /// All user params set in [extra] in the config | ||||

| pub extra: HashMap<String, Toml>, | pub extra: HashMap<String, Toml>, | ||||

| @@ -191,9 +226,9 @@ impl Default for Config { | |||||

| default_language: "en".to_string(), | default_language: "en".to_string(), | ||||

| generate_rss: false, | generate_rss: false, | ||||

| rss_limit: 10_000, | rss_limit: 10_000, | ||||

| generate_tags_pages: true, | |||||

| generate_categories_pages: true, | |||||

| taxonomies: Vec::new(), | |||||

| compile_sass: false, | compile_sass: false, | ||||

| check_external_links: false, | |||||

| build_search_index: false, | build_search_index: false, | ||||

| ignored_content: Vec::new(), | ignored_content: Vec::new(), | ||||

| ignored_content_globset: None, | ignored_content_globset: None, | ||||
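
The `Taxonomy` struct added above treats a taxonomy as paginated only when `paginate_by` is set and non-zero. A minimal sketch of that rule follows, assuming a stand-in struct with the serde derives removed so that it compiles without external crates. The `map_or` form and the derived `Default` are illustrative rewrites, not the code from the commit.

```rust
/// Simplified stand-in for the `Taxonomy` config struct shown in the diff.
/// The serde derives are omitted so the sketch needs no external crates.
#[derive(Clone, Debug, Default, PartialEq, Eq)]
pub struct Taxonomy {
    /// The name used in the URL, usually the plural
    pub name: String,
    /// Page size for the list of pages of each term, if any
    pub paginate_by: Option<usize>,
    pub paginate_path: Option<String>,
    /// Whether to generate an RSS feed for each term
    pub rss: bool,
}

impl Taxonomy {
    /// Gives the same result as the `if let` version in the diff:
    /// paginated only when `paginate_by` is `Some(n)` with `n > 0`.
    pub fn is_paginated(&self) -> bool {
        self.paginate_by.map_or(false, |n| n > 0)
    }
}
```

With this sketch, `Taxonomy { paginate_by: Some(5), ..Taxonomy::default() }` counts as paginated, while both `None` and `Some(0)` do not.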

+ 2

- 2

components/config/src/theme.rs

| @@ -3,7 +3,7 @@ use std::fs::File; | |||||

| use std::io::prelude::*; | use std::io::prelude::*; | ||||

| use std::path::PathBuf; | use std::path::PathBuf; | ||||

| use toml::{Value as Toml}; | |||||

| use toml::Value as Toml; | |||||

| use errors::{Result, ResultExt}; | use errors::{Result, ResultExt}; | ||||

| @@ -37,7 +37,7 @@ impl Theme { | |||||

| } | } | ||||

| Ok(Theme {extra}) | |||||

| Ok(Theme { extra }) | |||||

| } | } | ||||

| /// Parses a theme file from the given path | /// Parses a theme file from the given path | ||||

+ 2

- 1

components/content/Cargo.toml

| @@ -8,6 +8,7 @@ tera = "0.11" | |||||

| serde = "1" | serde = "1" | ||||

| slug = "0.1" | slug = "0.1" | ||||

| rayon = "1" | rayon = "1" | ||||

| chrono = "0.4" | |||||

| errors = { path = "../errors" } | errors = { path = "../errors" } | ||||

| config = { path = "../config" } | config = { path = "../config" } | ||||

| @@ -16,6 +17,6 @@ rendering = { path = "../rendering" } | |||||

| front_matter = { path = "../front_matter" } | front_matter = { path = "../front_matter" } | ||||

| [dev-dependencies] | [dev-dependencies] | ||||

| tempdir = "0.3" | |||||

| tempfile = "3" | |||||

| toml = "0.4" | toml = "0.4" | ||||

| globset = "0.4" | globset = "0.4" | ||||

+ 6

- 6

components/content/benches/all.rs

| @@ -11,7 +11,7 @@ use std::collections::HashMap; | |||||

| use config::Config; | use config::Config; | ||||

| use tera::Tera; | use tera::Tera; | ||||

| use front_matter::{SortBy, InsertAnchor}; | use front_matter::{SortBy, InsertAnchor}; | ||||

| use content::{Page, sort_pages, populate_previous_and_next_pages}; | |||||

| use content::{Page, sort_pages, populate_siblings}; | |||||

| fn create_pages(number: usize, sort_by: SortBy) -> Vec<Page> { | fn create_pages(number: usize, sort_by: SortBy) -> Vec<Page> { | ||||

| @@ -23,8 +23,8 @@ fn create_pages(number: usize, sort_by: SortBy) -> Vec<Page> { | |||||

| for i in 0..number { | for i in 0..number { | ||||

| let mut page = Page::default(); | let mut page = Page::default(); | ||||

| match sort_by { | match sort_by { | ||||

| SortBy::Weight => { page.meta.weight = Some(i); }, | |||||

| SortBy::Order => { page.meta.order = Some(i); }, | |||||

| SortBy::Weight => { page.meta.weight = Some(i); } | |||||

| SortBy::Order => { page.meta.order = Some(i); } | |||||

| _ => (), | _ => (), | ||||

| }; | }; | ||||

| page.raw_content = r#" | page.raw_content = r#" | ||||

| @@ -128,17 +128,17 @@ fn bench_sorting_order(b: &mut test::Bencher) { | |||||

| } | } | ||||

| #[bench] | #[bench] | ||||

| fn bench_populate_previous_and_next_pages(b: &mut test::Bencher) { | |||||

| fn bench_populate_siblings(b: &mut test::Bencher) { | |||||

| let pages = create_pages(250, SortBy::Order); | let pages = create_pages(250, SortBy::Order); | ||||

| let (sorted_pages, _) = sort_pages(pages, SortBy::Order); | let (sorted_pages, _) = sort_pages(pages, SortBy::Order); | ||||

| b.iter(|| populate_previous_and_next_pages(&sorted_pages.clone())); | |||||

| b.iter(|| populate_siblings(&sorted_pages.clone())); | |||||

| } | } | ||||

| #[bench] | #[bench] | ||||

| fn bench_page_render_html(b: &mut test::Bencher) { | fn bench_page_render_html(b: &mut test::Bencher) { | ||||

| let pages = create_pages(10, SortBy::Order); | let pages = create_pages(10, SortBy::Order); | ||||

| let (mut sorted_pages, _) = sort_pages(pages, SortBy::Order); | let (mut sorted_pages, _) = sort_pages(pages, SortBy::Order); | ||||

| sorted_pages = populate_previous_and_next_pages(&sorted_pages); | |||||

| sorted_pages = populate_siblings(&sorted_pages); | |||||

| let config = Config::default(); | let config = Config::default(); | ||||

| let mut tera = Tera::default(); | let mut tera = Tera::default(); | ||||

+ 3

- 2

components/content/src/lib.rs

| @@ -2,6 +2,7 @@ extern crate tera; | |||||

| extern crate slug; | extern crate slug; | ||||

| extern crate serde; | extern crate serde; | ||||

| extern crate rayon; | extern crate rayon; | ||||

| extern crate chrono; | |||||

| extern crate errors; | extern crate errors; | ||||

| extern crate config; | extern crate config; | ||||

| @@ -10,7 +11,7 @@ extern crate rendering; | |||||

| extern crate utils; | extern crate utils; | ||||

| #[cfg(test)] | #[cfg(test)] | ||||

| extern crate tempdir; | |||||

| extern crate tempfile; | |||||

| #[cfg(test)] | #[cfg(test)] | ||||

| extern crate toml; | extern crate toml; | ||||

| #[cfg(test)] | #[cfg(test)] | ||||

| @@ -25,4 +26,4 @@ mod sorting; | |||||

| pub use file_info::FileInfo; | pub use file_info::FileInfo; | ||||

| pub use page::Page; | pub use page::Page; | ||||

| pub use section::Section; | pub use section::Section; | ||||

| pub use sorting::{sort_pages, populate_previous_and_next_pages}; | |||||

| pub use sorting::{sort_pages, populate_siblings}; | |||||
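
The changelog says `page.next/page.previous` become `page.heavier/page.lighter` for weight-sorted sections, and `populate_siblings` is the function now exported for that job. Its body is not part of this excerpt, so the following is only a rough sketch of the idea of linking neighbours in an already sorted list. `MiniPage`, `link_weight_siblings`, the use of titles instead of boxed pages, and the lightest-first ordering are all assumptions made for illustration.

```rust
/// Hypothetical, trimmed-down page: a title plus the two weight siblings.
#[derive(Clone, Debug, Default, PartialEq)]
pub struct MiniPage {
    pub title: String,
    pub lighter: Option<String>,
    pub heavier: Option<String>,
}

/// Links each page to its neighbours, assuming `sorted` is ordered from
/// lightest to heaviest (an assumption of this sketch). Titles are stored
/// instead of boxed pages to keep the example short.
pub fn link_weight_siblings(sorted: &[MiniPage]) -> Vec<MiniPage> {
    let mut linked = sorted.to_vec();
    for i in 0..linked.len() {
        // The previous entry in the slice is the lighter sibling, if any.
        linked[i].lighter = if i > 0 {
            Some(sorted[i - 1].title.clone())
        } else {
            None
        };
        // The next entry in the slice is the heavier sibling, if any.
        linked[i].heavier = sorted.get(i + 1).map(|p| p.title.clone());
    }
    linked
}
```

A date-sorted variant would fill `earlier` and `later` in the same way; which neighbour counts as "earlier" depends on the sort direction, which this excerpt does not show.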

+ 64

- 29

components/content/src/page.rs

| @@ -3,7 +3,7 @@ use std::collections::HashMap; | |||||

| use std::path::{Path, PathBuf}; | use std::path::{Path, PathBuf}; | ||||

| use std::result::Result as StdResult; | use std::result::Result as StdResult; | ||||

| use chrono::Datelike; | |||||

| use tera::{Tera, Context as TeraContext}; | use tera::{Tera, Context as TeraContext}; | ||||

| use serde::ser::{SerializeStruct, self}; | use serde::ser::{SerializeStruct, self}; | ||||

| use slug::slugify; | use slug::slugify; | ||||

| @@ -14,7 +14,7 @@ use utils::fs::{read_file, find_related_assets}; | |||||

| use utils::site::get_reading_analytics; | use utils::site::get_reading_analytics; | ||||

| use utils::templates::render_template; | use utils::templates::render_template; | ||||

| use front_matter::{PageFrontMatter, InsertAnchor, split_page_content}; | use front_matter::{PageFrontMatter, InsertAnchor, split_page_content}; | ||||

| use rendering::{Context, Header, markdown_to_html}; | |||||

| use rendering::{RenderContext, Header, render_content}; | |||||

| use file_info::FileInfo; | use file_info::FileInfo; | ||||

| @@ -44,10 +44,14 @@ pub struct Page { | |||||

| /// When <!-- more --> is found in the text, will take the content up to that part | /// When <!-- more --> is found in the text, will take the content up to that part | ||||

| /// as summary | /// as summary | ||||

| pub summary: Option<String>, | pub summary: Option<String>, | ||||

| /// The previous page, by whatever sorting is used for the index/section | |||||

| pub previous: Option<Box<Page>>, | |||||

| /// The next page, by whatever sorting is used for the index/section | |||||

| pub next: Option<Box<Page>>, | |||||

| /// The earlier page, for pages sorted by date | |||||

| pub earlier: Option<Box<Page>>, | |||||

| /// The later page, for pages sorted by date | |||||

| pub later: Option<Box<Page>>, | |||||

| /// The lighter page, for pages sorted by weight | |||||

| pub lighter: Option<Box<Page>>, | |||||

| /// The heavier page, for pages sorted by weight | |||||

| pub heavier: Option<Box<Page>>, | |||||

| /// Toc made from the headers of the markdown file | /// Toc made from the headers of the markdown file | ||||

| pub toc: Vec<Header>, | pub toc: Vec<Header>, | ||||

| } | } | ||||

| @@ -68,8 +72,10 @@ impl Page { | |||||

| components: vec![], | components: vec![], | ||||

| permalink: "".to_string(), | permalink: "".to_string(), | ||||

| summary: None, | summary: None, | ||||

| previous: None, | |||||

| next: None, | |||||

| earlier: None, | |||||

| later: None, | |||||

| lighter: None, | |||||

| heavier: None, | |||||

| toc: vec![], | toc: vec![], | ||||

| } | } | ||||

| } | } | ||||

| @@ -156,27 +162,32 @@ impl Page { | |||||

| } | } | ||||

| Ok(page) | Ok(page) | ||||

| } | } | ||||

| /// We need access to all pages url to render links relative to content | /// We need access to all pages url to render links relative to content | ||||

| /// so that can't happen at the same time as parsing | /// so that can't happen at the same time as parsing | ||||

| pub fn render_markdown(&mut self, permalinks: &HashMap<String, String>, tera: &Tera, config: &Config, anchor_insert: InsertAnchor) -> Result<()> { | pub fn render_markdown(&mut self, permalinks: &HashMap<String, String>, tera: &Tera, config: &Config, anchor_insert: InsertAnchor) -> Result<()> { | ||||

| let context = Context::new( | |||||

| let mut context = RenderContext::new( | |||||

| tera, | tera, | ||||

| config.highlight_code, | |||||

| config.highlight_theme.clone(), | |||||

| config, | |||||

| &self.permalink, | &self.permalink, | ||||

| permalinks, | permalinks, | ||||

| anchor_insert | |||||

| anchor_insert, | |||||

| ); | ); | ||||

| let res = markdown_to_html(&self.raw_content.replacen("<!-- more -->", "<a name=\"continue-reading\"></a>", 1), &context)?; | |||||

| context.tera_context.add("page", self); | |||||

| let res = render_content( | |||||

| &self.raw_content.replacen("<!-- more -->", "<a name=\"continue-reading\"></a>", 1), | |||||

| &context, | |||||

| ).chain_err(|| format!("Failed to render content of {}", self.file.path.display()))?; | |||||

| self.content = res.0; | self.content = res.0; | ||||

| self.toc = res.1; | self.toc = res.1; | ||||

| if self.raw_content.contains("<!-- more -->") { | if self.raw_content.contains("<!-- more -->") { | ||||

| self.summary = Some({ | self.summary = Some({ | ||||

| let summary = self.raw_content.splitn(2, "<!-- more -->").collect::<Vec<&str>>()[0]; | let summary = self.raw_content.splitn(2, "<!-- more -->").collect::<Vec<&str>>()[0]; | ||||

| markdown_to_html(summary, &context)?.0 | |||||

| render_content(summary, &context) | |||||

| .chain_err(|| format!("Failed to render content of {}", self.file.path.display()))?.0 | |||||

| }) | }) | ||||

| } | } | ||||

| @@ -199,6 +210,15 @@ impl Page { | |||||

| render_template(&tpl_name, tera, &context, &config.theme) | render_template(&tpl_name, tera, &context, &config.theme) | ||||

| .chain_err(|| format!("Failed to render page '{}'", self.file.path.display())) | .chain_err(|| format!("Failed to render page '{}'", self.file.path.display())) | ||||

| } | } | ||||

| /// Creates a vector of asset URLs. | |||||

| fn serialize_assets(&self) -> Vec<String> { | |||||

| self.assets.iter() | |||||

| .filter_map(|asset| asset.file_name()) | |||||

| .filter_map(|filename| filename.to_str()) | |||||

| .map(|filename| self.path.clone() + filename) | |||||

| .collect() | |||||

| } | |||||

| } | } | ||||

| impl Default for Page { | impl Default for Page { | ||||

| @@ -214,8 +234,10 @@ impl Default for Page { | |||||

| components: vec![], | components: vec![], | ||||

| permalink: "".to_string(), | permalink: "".to_string(), | ||||

| summary: None, | summary: None, | ||||

| previous: None, | |||||

| next: None, | |||||

| earlier: None, | |||||

| later: None, | |||||

| lighter: None, | |||||

| heavier: None, | |||||

| toc: vec![], | toc: vec![], | ||||

| } | } | ||||

| } | } | ||||

| @@ -223,26 +245,39 @@ impl Default for Page { | |||||

| impl ser::Serialize for Page { | impl ser::Serialize for Page { | ||||

| fn serialize<S>(&self, serializer: S) -> StdResult<S::Ok, S::Error> where S: ser::Serializer { | fn serialize<S>(&self, serializer: S) -> StdResult<S::Ok, S::Error> where S: ser::Serializer { | ||||

| let mut state = serializer.serialize_struct("page", 18)?; | |||||

| let mut state = serializer.serialize_struct("page", 20)?; | |||||

| state.serialize_field("content", &self.content)?; | state.serialize_field("content", &self.content)?; | ||||

| state.serialize_field("title", &self.meta.title)?; | state.serialize_field("title", &self.meta.title)?; | ||||

| state.serialize_field("description", &self.meta.description)?; | state.serialize_field("description", &self.meta.description)?; | ||||

| state.serialize_field("date", &self.meta.date)?; | state.serialize_field("date", &self.meta.date)?; | ||||

| if let Some(chrono_datetime) = self.meta.date() { | |||||

| let d = chrono_datetime.date(); | |||||

| state.serialize_field("year", &d.year())?; | |||||

| state.serialize_field("month", &d.month())?; | |||||

| state.serialize_field("day", &d.day())?; | |||||

| } else { | |||||

| state.serialize_field::<Option<usize>>("year", &None)?; | |||||

| state.serialize_field::<Option<usize>>("month", &None)?; | |||||

| state.serialize_field::<Option<usize>>("day", &None)?; | |||||

| } | |||||

| state.serialize_field("slug", &self.slug)?; | state.serialize_field("slug", &self.slug)?; | ||||

| state.serialize_field("path", &self.path)?; | state.serialize_field("path", &self.path)?; | ||||

| state.serialize_field("components", &self.components)?; | state.serialize_field("components", &self.components)?; | ||||

| state.serialize_field("permalink", &self.permalink)?; | state.serialize_field("permalink", &self.permalink)?; | ||||

| state.serialize_field("summary", &self.summary)?; | state.serialize_field("summary", &self.summary)?; | ||||

| state.serialize_field("tags", &self.meta.tags)?; | |||||

| state.serialize_field("category", &self.meta.category)?; | |||||

| state.serialize_field("taxonomies", &self.meta.taxonomies)?; | |||||

| state.serialize_field("extra", &self.meta.extra)?; | state.serialize_field("extra", &self.meta.extra)?; | ||||

| let (word_count, reading_time) = get_reading_analytics(&self.raw_content); | let (word_count, reading_time) = get_reading_analytics(&self.raw_content); | ||||

| state.serialize_field("word_count", &word_count)?; | state.serialize_field("word_count", &word_count)?; | ||||

| state.serialize_field("reading_time", &reading_time)?; | state.serialize_field("reading_time", &reading_time)?; | ||||

| state.serialize_field("previous", &self.previous)?; | |||||

| state.serialize_field("next", &self.next)?; | |||||

| state.serialize_field("earlier", &self.earlier)?; | |||||

| state.serialize_field("later", &self.later)?; | |||||

| state.serialize_field("lighter", &self.lighter)?; | |||||

| state.serialize_field("heavier", &self.heavier)?; | |||||

| state.serialize_field("toc", &self.toc)?; | state.serialize_field("toc", &self.toc)?; | ||||

| state.serialize_field("draft", &self.is_draft())?; | state.serialize_field("draft", &self.is_draft())?; | ||||

| let assets = self.serialize_assets(); | |||||

| state.serialize_field("assets", &assets)?; | |||||

| state.end() | state.end() | ||||

| } | } | ||||

| } | } | ||||
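
The new `assets` field is built by `serialize_assets`, which keeps the file name of each co-located asset and appends it to the page path. Here is a minimal standalone sketch of the same iterator chain. The free function `asset_urls` and its parameters are hypothetical stand-ins for `self.path` and `self.assets`, and the trailing slash on the page path is an assumption.

```rust
use std::path::PathBuf;

/// Hypothetical free-function version of `Page::serialize_assets`:
/// `page_path` stands in for `self.path`, `assets` for `self.assets`.
pub fn asset_urls(page_path: &str, assets: &[PathBuf]) -> Vec<String> {
    assets
        .iter()
        // Drop entries without a final component (for example "..").
        .filter_map(|asset| asset.file_name())
        // Drop file names that are not valid UTF-8.
        .filter_map(|filename| filename.to_str())
        // Plain concatenation, as in the diff; assumes `page_path` ends in '/'.
        .map(|filename| format!("{}{}", page_path, filename))
        .collect()
}
```

Because the join is a plain string concatenation, a page path without a trailing slash would produce fused names such as `postsgraph.jpg`, so callers of this sketch need to pass a path that ends in `/`.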

| @@ -255,7 +290,7 @@ mod tests { | |||||

| use std::path::Path; | use std::path::Path; | ||||

| use tera::Tera; | use tera::Tera; | ||||

| use tempdir::TempDir; | |||||

| use tempfile::tempdir; | |||||

| use globset::{Glob, GlobSetBuilder}; | use globset::{Glob, GlobSetBuilder}; | ||||

| use config::Config; | use config::Config; | ||||

| @@ -387,7 +422,7 @@ Hello world | |||||

| #[test] | #[test] | ||||

| fn page_with_assets_gets_right_info() { | fn page_with_assets_gets_right_info() { | ||||

| let tmp_dir = TempDir::new("example").expect("create temp dir"); | |||||

| let tmp_dir = tempdir().expect("create temp dir"); | |||||

| let path = tmp_dir.path(); | let path = tmp_dir.path(); | ||||

| create_dir(&path.join("content")).expect("create content temp dir"); | create_dir(&path.join("content")).expect("create content temp dir"); | ||||

| create_dir(&path.join("content").join("posts")).expect("create posts temp dir"); | create_dir(&path.join("content").join("posts")).expect("create posts temp dir"); | ||||

| @@ -401,7 +436,7 @@ Hello world | |||||

| let res = Page::from_file( | let res = Page::from_file( | ||||

| nested_path.join("index.md").as_path(), | nested_path.join("index.md").as_path(), | ||||

| &Config::default() | |||||

| &Config::default(), | |||||

| ); | ); | ||||

| assert!(res.is_ok()); | assert!(res.is_ok()); | ||||

| let page = res.unwrap(); | let page = res.unwrap(); | ||||

| @@ -413,7 +448,7 @@ Hello world | |||||

| #[test] | #[test] | ||||

| fn page_with_assets_and_slug_overrides_path() { | fn page_with_assets_and_slug_overrides_path() { | ||||

| let tmp_dir = TempDir::new("example").expect("create temp dir"); | |||||

| let tmp_dir = tempdir().expect("create temp dir"); | |||||

| let path = tmp_dir.path(); | let path = tmp_dir.path(); | ||||

| create_dir(&path.join("content")).expect("create content temp dir"); | create_dir(&path.join("content")).expect("create content temp dir"); | ||||

| create_dir(&path.join("content").join("posts")).expect("create posts temp dir"); | create_dir(&path.join("content").join("posts")).expect("create posts temp dir"); | ||||

| @@ -427,7 +462,7 @@ Hello world | |||||

| let res = Page::from_file( | let res = Page::from_file( | ||||

| nested_path.join("index.md").as_path(), | nested_path.join("index.md").as_path(), | ||||

| &Config::default() | |||||

| &Config::default(), | |||||

| ); | ); | ||||

| assert!(res.is_ok()); | assert!(res.is_ok()); | ||||

| let page = res.unwrap(); | let page = res.unwrap(); | ||||

| @@ -439,7 +474,7 @@ Hello world | |||||

| #[test] | #[test] | ||||

| fn page_with_ignored_assets_filters_out_correct_files() { | fn page_with_ignored_assets_filters_out_correct_files() { | ||||

| let tmp_dir = TempDir::new("example").expect("create temp dir"); | |||||

| let tmp_dir = tempdir().expect("create temp dir"); | |||||

| let path = tmp_dir.path(); | let path = tmp_dir.path(); | ||||

| create_dir(&path.join("content")).expect("create content temp dir"); | create_dir(&path.join("content")).expect("create content temp dir"); | ||||

| create_dir(&path.join("content").join("posts")).expect("create posts temp dir"); | create_dir(&path.join("content").join("posts")).expect("create posts temp dir"); | ||||

| @@ -458,7 +493,7 @@ Hello world | |||||

| let res = Page::from_file( | let res = Page::from_file( | ||||

| nested_path.join("index.md").as_path(), | nested_path.join("index.md").as_path(), | ||||

| &config | |||||

| &config, | |||||

| ); | ); | ||||

| assert!(res.is_ok()); | assert!(res.is_ok()); | ||||

+ 9

- 6

components/content/src/section.rs


| @@ -11,7 +11,7 @@ use errors::{Result, ResultExt}; | |||||

| use utils::fs::read_file; | use utils::fs::read_file; | ||||

| use utils::templates::render_template; | use utils::templates::render_template; | ||||

| use utils::site::get_reading_analytics; | use utils::site::get_reading_analytics; | ||||

| use rendering::{Context, Header, markdown_to_html}; | |||||

| use rendering::{RenderContext, Header, render_content}; | |||||

| use page::Page; | use page::Page; | ||||

| use file_info::FileInfo; | use file_info::FileInfo; | ||||

| @@ -91,22 +91,25 @@ impl Section { | |||||

| return "index.html".to_string(); | return "index.html".to_string(); | ||||

| } | } | ||||

| "section.html".to_string() | "section.html".to_string() | ||||

| }, | |||||

| } | |||||

| } | } | ||||

| } | } | ||||

| /// We need access to all pages url to render links relative to content | /// We need access to all pages url to render links relative to content | ||||

| /// so that can't happen at the same time as parsing | /// so that can't happen at the same time as parsing | ||||

| pub fn render_markdown(&mut self, permalinks: &HashMap<String, String>, tera: &Tera, config: &Config) -> Result<()> { | pub fn render_markdown(&mut self, permalinks: &HashMap<String, String>, tera: &Tera, config: &Config) -> Result<()> { | ||||

| let context = Context::new( | |||||

| let mut context = RenderContext::new( | |||||

| tera, | tera, | ||||

| config.highlight_code, | |||||

| config.highlight_theme.clone(), | |||||

| config, | |||||

| &self.permalink, | &self.permalink, | ||||

| permalinks, | permalinks, | ||||

| self.meta.insert_anchor_links, | self.meta.insert_anchor_links, | ||||

| ); | ); | ||||

| let res = markdown_to_html(&self.raw_content, &context)?; | |||||

| context.tera_context.add("section", self); | |||||

| let res = render_content(&self.raw_content, &context) | |||||

| .chain_err(|| format!("Failed to render content of {}", self.file.path.display()))?; | |||||

| self.content = res.0; | self.content = res.0; | ||||

| self.toc = res.1; | self.toc = res.1; | ||||

| Ok(()) | Ok(()) | ||||

+ 57

- 126

components/content/src/sorting.rs


| @@ -7,11 +7,11 @@ use front_matter::SortBy; | |||||

| /// Sort pages by the given criteria | /// Sort pages by the given criteria | ||||

| /// | /// | ||||

| /// Any pages that doesn't have a the required field when the sorting method is other than none | |||||

| /// Any pages that doesn't have a required field when the sorting method is other than none | |||||

| /// will be ignored. | /// will be ignored. | ||||

| pub fn sort_pages(pages: Vec<Page>, sort_by: SortBy) -> (Vec<Page>, Vec<Page>) { | pub fn sort_pages(pages: Vec<Page>, sort_by: SortBy) -> (Vec<Page>, Vec<Page>) { | ||||

| if sort_by == SortBy::None { | if sort_by == SortBy::None { | ||||

| return (pages, vec![]); | |||||

| return (pages, vec![]); | |||||

| } | } | ||||

| let (mut can_be_sorted, cannot_be_sorted): (Vec<_>, Vec<_>) = pages | let (mut can_be_sorted, cannot_be_sorted): (Vec<_>, Vec<_>) = pages | ||||

| @@ -19,7 +19,6 @@ pub fn sort_pages(pages: Vec<Page>, sort_by: SortBy) -> (Vec<Page>, Vec<Page>) { | |||||

| .partition(|page| { | .partition(|page| { | ||||

| match sort_by { | match sort_by { | ||||

| SortBy::Date => page.meta.date.is_some(), | SortBy::Date => page.meta.date.is_some(), | ||||

| SortBy::Order => page.meta.order.is_some(), | |||||

| SortBy::Weight => page.meta.weight.is_some(), | SortBy::Weight => page.meta.weight.is_some(), | ||||

| _ => unreachable!() | _ => unreachable!() | ||||

| } | } | ||||

| @@ -35,17 +34,7 @@ pub fn sort_pages(pages: Vec<Page>, sort_by: SortBy) -> (Vec<Page>, Vec<Page>) { | |||||

| ord | ord | ||||

| } | } | ||||

| }) | }) | ||||

| }, | |||||

| SortBy::Order => { | |||||

| can_be_sorted.par_sort_unstable_by(|a, b| { | |||||

| let ord = b.meta.order().cmp(&a.meta.order()); | |||||

| if ord == Ordering::Equal { | |||||

| a.permalink.cmp(&b.permalink) | |||||

| } else { | |||||

| ord | |||||

| } | |||||

| }) | |||||

| }, | |||||

| } | |||||

| SortBy::Weight => { | SortBy::Weight => { | ||||

| can_be_sorted.par_sort_unstable_by(|a, b| { | can_be_sorted.par_sort_unstable_by(|a, b| { | ||||

| let ord = a.meta.weight().cmp(&b.meta.weight()); | let ord = a.meta.weight().cmp(&b.meta.weight()); | ||||

| @@ -55,7 +44,7 @@ pub fn sort_pages(pages: Vec<Page>, sort_by: SortBy) -> (Vec<Page>, Vec<Page>) { | |||||

| ord | ord | ||||

| } | } | ||||

| }) | }) | ||||

| }, | |||||

| } | |||||

| _ => unreachable!() | _ => unreachable!() | ||||

| }; | }; | ||||

| @@ -64,7 +53,7 @@ pub fn sort_pages(pages: Vec<Page>, sort_by: SortBy) -> (Vec<Page>, Vec<Page>) { | |||||

| /// Horribly inefficient way to set previous and next on each pages that skips drafts | /// Horribly inefficient way to set previous and next on each pages that skips drafts | ||||

| /// So many clones | /// So many clones | ||||

| pub fn populate_previous_and_next_pages(input: &[Page]) -> Vec<Page> { | |||||

| pub fn populate_siblings(input: &[Page], sort_by: SortBy) -> Vec<Page> { | |||||

| let mut res = Vec::with_capacity(input.len()); | let mut res = Vec::with_capacity(input.len()); | ||||

| // The input is already sorted | // The input is already sorted | ||||

| @@ -91,9 +80,20 @@ pub fn populate_previous_and_next_pages(input: &[Page]) -> Vec<Page> { | |||||

| // Remove prev/next otherwise we serialise the whole thing... | // Remove prev/next otherwise we serialise the whole thing... | ||||

| let mut next_page = input[j].clone(); | let mut next_page = input[j].clone(); | ||||

| next_page.previous = None; | |||||

| next_page.next = None; | |||||

| new_page.next = Some(Box::new(next_page)); | |||||

| match sort_by { | |||||

| SortBy::Weight => { | |||||

| next_page.lighter = None; | |||||

| next_page.heavier = None; | |||||

| new_page.lighter = Some(Box::new(next_page)); | |||||

| } | |||||

| SortBy::Date => { | |||||

| next_page.earlier = None; | |||||

| next_page.later = None; | |||||

| new_page.later = Some(Box::new(next_page)); | |||||

| } | |||||

| SortBy::None => () | |||||

| } | |||||

| break; | break; | ||||

| } | } | ||||

| } | } | ||||

| @@ -113,9 +113,19 @@ pub fn populate_previous_and_next_pages(input: &[Page]) -> Vec<Page> { | |||||

| // Remove prev/next otherwise we serialise the whole thing... | // Remove prev/next otherwise we serialise the whole thing... | ||||

| let mut previous_page = input[j].clone(); | let mut previous_page = input[j].clone(); | ||||

| previous_page.previous = None; | |||||

| previous_page.next = None; | |||||

| new_page.previous = Some(Box::new(previous_page)); | |||||

| match sort_by { | |||||

| SortBy::Weight => { | |||||

| previous_page.lighter = None; | |||||

| previous_page.heavier = None; | |||||

| new_page.heavier = Some(Box::new(previous_page)); | |||||

| } | |||||

| SortBy::Date => { | |||||

| previous_page.earlier = None; | |||||

| previous_page.later = None; | |||||

| new_page.earlier = Some(Box::new(previous_page)); | |||||

| } | |||||

| SortBy::None => {} | |||||

| } | |||||

| break; | break; | ||||

| } | } | ||||

| } | } | ||||

| @@ -129,7 +139,7 @@ pub fn populate_previous_and_next_pages(input: &[Page]) -> Vec<Page> { | |||||

| mod tests { | mod tests { | ||||

| use front_matter::{PageFrontMatter, SortBy}; | use front_matter::{PageFrontMatter, SortBy}; | ||||

| use page::Page; | use page::Page; | ||||

| use super::{sort_pages, populate_previous_and_next_pages}; | |||||

| use super::{sort_pages, populate_siblings}; | |||||

| fn create_page_with_date(date: &str) -> Page { | fn create_page_with_date(date: &str) -> Page { | ||||

| let mut front_matter = PageFrontMatter::default(); | let mut front_matter = PageFrontMatter::default(); | ||||

| @@ -137,22 +147,6 @@ mod tests { | |||||

| Page::new("content/hello.md", front_matter) | Page::new("content/hello.md", front_matter) | ||||

| } | } | ||||

| fn create_page_with_order(order: usize, filename: &str) -> Page { | |||||

| let mut front_matter = PageFrontMatter::default(); | |||||

| front_matter.order = Some(order); | |||||

| let mut p = Page::new("content/".to_string() + filename, front_matter); | |||||

| // Faking a permalink to test sorting with equal order | |||||

| p.permalink = filename.to_string(); | |||||

| p | |||||

| } | |||||

| fn create_draft_page_with_order(order: usize) -> Page { | |||||

| let mut front_matter = PageFrontMatter::default(); | |||||

| front_matter.order = Some(order); | |||||

| front_matter.draft = true; | |||||

| Page::new("content/hello.md", front_matter) | |||||

| } | |||||

| fn create_page_with_weight(weight: usize) -> Page { | fn create_page_with_weight(weight: usize) -> Page { | ||||

| let mut front_matter = PageFrontMatter::default(); | let mut front_matter = PageFrontMatter::default(); | ||||

| front_matter.weight = Some(weight); | front_matter.weight = Some(weight); | ||||

| @@ -173,37 +167,6 @@ mod tests { | |||||

| assert_eq!(pages[2].clone().meta.date.unwrap().to_string(), "2017-01-01"); | assert_eq!(pages[2].clone().meta.date.unwrap().to_string(), "2017-01-01"); | ||||

| } | } | ||||

| #[test] | |||||

| fn can_sort_by_order() { | |||||

| let input = vec![ | |||||

| create_page_with_order(2, "hello.md"), | |||||

| create_page_with_order(3, "hello2.md"), | |||||

| create_page_with_order(1, "hello3.md"), | |||||

| ]; | |||||

| let (pages, _) = sort_pages(input, SortBy::Order); | |||||

| // Should be sorted by order | |||||

| assert_eq!(pages[0].clone().meta.order.unwrap(), 3); | |||||

| assert_eq!(pages[1].clone().meta.order.unwrap(), 2); | |||||

| assert_eq!(pages[2].clone().meta.order.unwrap(), 1); | |||||

| } | |||||

| #[test] | |||||

| fn can_sort_by_order_uses_permalink_to_break_ties() { | |||||

| let input = vec![ | |||||

| create_page_with_order(3, "b.md"), | |||||

| create_page_with_order(3, "a.md"), | |||||

| create_page_with_order(3, "c.md"), | |||||

| ]; | |||||

| let (pages, _) = sort_pages(input, SortBy::Order); | |||||

| // Should be sorted by order | |||||

| assert_eq!(pages[0].clone().meta.order.unwrap(), 3); | |||||

| assert_eq!(pages[0].clone().permalink, "a.md"); | |||||

| assert_eq!(pages[1].clone().meta.order.unwrap(), 3); | |||||

| assert_eq!(pages[1].clone().permalink, "b.md"); | |||||

| assert_eq!(pages[2].clone().meta.order.unwrap(), 3); | |||||

| assert_eq!(pages[2].clone().permalink, "c.md"); | |||||

| } | |||||

| #[test] | #[test] | ||||

| fn can_sort_by_weight() { | fn can_sort_by_weight() { | ||||

| let input = vec![ | let input = vec![ | ||||

| @@ -221,80 +184,48 @@ mod tests { | |||||

| #[test] | #[test] | ||||

| fn can_sort_by_none() { | fn can_sort_by_none() { | ||||

| let input = vec![ | let input = vec![ | ||||

| create_page_with_order(2, "a.md"), | |||||

| create_page_with_order(3, "a.md"), | |||||

| create_page_with_order(1, "a.md"), | |||||

| create_page_with_weight(2), | |||||

| create_page_with_weight(3), | |||||

| create_page_with_weight(1), | |||||

| ]; | ]; | ||||

| let (pages, _) = sort_pages(input, SortBy::None); | let (pages, _) = sort_pages(input, SortBy::None); | ||||

| // Should be sorted by date | |||||

| assert_eq!(pages[0].clone().meta.order.unwrap(), 2); | |||||

| assert_eq!(pages[1].clone().meta.order.unwrap(), 3); | |||||

| assert_eq!(pages[2].clone().meta.order.unwrap(), 1); | |||||

| assert_eq!(pages[0].clone().meta.weight.unwrap(), 2); | |||||

| assert_eq!(pages[1].clone().meta.weight.unwrap(), 3); | |||||

| assert_eq!(pages[2].clone().meta.weight.unwrap(), 1); | |||||

| } | } | ||||

| #[test] | #[test] | ||||

| fn ignore_page_with_missing_field() { | fn ignore_page_with_missing_field() { | ||||

| let input = vec![ | let input = vec![ | ||||

| create_page_with_order(2, "a.md"), | |||||

| create_page_with_order(3, "a.md"), | |||||

| create_page_with_weight(2), | |||||

| create_page_with_weight(3), | |||||

| create_page_with_date("2019-01-01"), | create_page_with_date("2019-01-01"), | ||||

| ]; | ]; | ||||

| let (pages, unsorted) = sort_pages(input, SortBy::Order); | |||||

| let (pages, unsorted) = sort_pages(input, SortBy::Weight); | |||||

| assert_eq!(pages.len(), 2); | assert_eq!(pages.len(), 2); | ||||

| assert_eq!(unsorted.len(), 1); | assert_eq!(unsorted.len(), 1); | ||||

| } | } | ||||

| #[test] | #[test] | ||||

| fn can_populate_previous_and_next_pages() { | |||||

| let input = vec![ | |||||

| create_page_with_order(1, "a.md"), | |||||

| create_page_with_order(2, "b.md"), | |||||

| create_page_with_order(3, "a.md"), | |||||

| ]; | |||||

| let pages = populate_previous_and_next_pages(&input); | |||||

| assert!(pages[0].clone().next.is_none()); | |||||

| assert!(pages[0].clone().previous.is_some()); | |||||

| assert_eq!(pages[0].clone().previous.unwrap().meta.order.unwrap(), 2); | |||||

| assert!(pages[1].clone().next.is_some()); | |||||

| assert!(pages[1].clone().previous.is_some()); | |||||

| assert_eq!(pages[1].clone().previous.unwrap().meta.order.unwrap(), 3); | |||||

| assert_eq!(pages[1].clone().next.unwrap().meta.order.unwrap(), 1); | |||||

| assert!(pages[2].clone().next.is_some()); | |||||

| assert!(pages[2].clone().previous.is_none()); | |||||

| assert_eq!(pages[2].clone().next.unwrap().meta.order.unwrap(), 2); | |||||

| } | |||||

| #[test] | |||||

| fn can_populate_previous_and_next_pages_skip_drafts() { | |||||

| fn can_populate_siblings() { | |||||

| let input = vec![ | let input = vec![ | ||||

| create_draft_page_with_order(0), | |||||

| create_page_with_order(1, "a.md"), | |||||

| create_page_with_order(2, "b.md"), | |||||

| create_page_with_order(3, "c.md"), | |||||

| create_draft_page_with_order(4), | |||||

| create_page_with_weight(1), | |||||

| create_page_with_weight(2), | |||||

| create_page_with_weight(3), | |||||

| ]; | ]; | ||||

| let pages = populate_previous_and_next_pages(&input); | |||||

| assert!(pages[0].clone().next.is_none()); | |||||

| assert!(pages[0].clone().previous.is_none()); | |||||

| assert!(pages[1].clone().next.is_none()); | |||||

| assert!(pages[1].clone().previous.is_some()); | |||||

| assert_eq!(pages[1].clone().previous.unwrap().meta.order.unwrap(), 2); | |||||

| let pages = populate_siblings(&input, SortBy::Weight); | |||||

| assert!(pages[2].clone().next.is_some()); | |||||

| assert!(pages[2].clone().previous.is_some()); | |||||

| assert_eq!(pages[2].clone().previous.unwrap().meta.order.unwrap(), 3); | |||||

| assert_eq!(pages[2].clone().next.unwrap().meta.order.unwrap(), 1); | |||||

| assert!(pages[0].clone().lighter.is_none()); | |||||

| assert!(pages[0].clone().heavier.is_some()); | |||||

| assert_eq!(pages[0].clone().heavier.unwrap().meta.weight.unwrap(), 2); | |||||

| assert!(pages[3].clone().next.is_some()); | |||||

| assert!(pages[3].clone().previous.is_none()); | |||||

| assert_eq!(pages[3].clone().next.unwrap().meta.order.unwrap(), 2); | |||||

| assert!(pages[1].clone().heavier.is_some()); | |||||

| assert!(pages[1].clone().lighter.is_some()); | |||||

| assert_eq!(pages[1].clone().lighter.unwrap().meta.weight.unwrap(), 1); | |||||

| assert_eq!(pages[1].clone().heavier.unwrap().meta.weight.unwrap(), 3); | |||||

| assert!(pages[4].clone().next.is_none()); | |||||

| assert!(pages[4].clone().previous.is_none()); | |||||

| assert!(pages[2].clone().lighter.is_some()); | |||||

| assert!(pages[2].clone().heavier.is_none()); | |||||

| assert_eq!(pages[2].clone().lighter.unwrap().meta.weight.unwrap(), 2); | |||||

| } | } | ||||

| } | } | ||||
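
Note on the `sorting.rs` change above: the hunks show pages being partitioned by whether they carry the sort field, sorted with a permalink tie-break, and then given `lighter`/`heavier` (or `earlier`/`later`) siblings while drafts are skipped. The following is a minimal sketch of that idea for the weight case only. `MiniPage`, `sort_by_weight` and `weight_siblings` are hypothetical names invented for illustration; the sketch returns indices instead of boxed page clones and uses a sequential sort rather than rayon, so it is not the code from this commit.

```rust
use std::cmp::Ordering;

/// Hypothetical, stripped-down stand-in for the real `Page` type.
#[derive(Debug, Clone, PartialEq)]
struct MiniPage {
    permalink: String,
    weight: Option<usize>,
    draft: bool,
}

/// Split pages into (sorted by ascending weight, pages without a weight).
/// Ties are broken by permalink so the resulting order is deterministic.
fn sort_by_weight(pages: Vec<MiniPage>) -> (Vec<MiniPage>, Vec<MiniPage>) {
    let (mut sortable, unsortable): (Vec<_>, Vec<_>) =
        pages.into_iter().partition(|p| p.weight.is_some());
    sortable.sort_unstable_by(|a, b| match a.weight.cmp(&b.weight) {
        Ordering::Equal => a.permalink.cmp(&b.permalink),
        ord => ord,
    });
    (sortable, unsortable)
}

/// For each page of an already sorted slice, return the indices of its
/// nearest non-draft (lighter, heavier) neighbours. Drafts get no siblings.
fn weight_siblings(sorted: &[MiniPage]) -> Vec<(Option<usize>, Option<usize>)> {
    sorted
        .iter()
        .enumerate()
        .map(|(i, page)| {
            if page.draft {
                return (None, None);
            }
            // Walk towards the start for the lighter page, towards the end for the heavier one.
            let lighter = (0..i).rev().find(|&j| !sorted[j].draft);
            let heavier = (i + 1..sorted.len()).find(|&j| !sorted[j].draft);
            (lighter, heavier)
        })
        .collect()
}
```

Returning indices avoids the clone-per-sibling cost that the doc comment in the diff complains about, at the price of callers having to look pages up themselves.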

+ 2

- 1

components/errors/Cargo.toml


| @@ -4,6 +4,7 @@ version = "0.1.0" | |||||

| authors = ["Vincent Prouillet <prouillet.vincent@gmail.com>"] | authors = ["Vincent Prouillet <prouillet.vincent@gmail.com>"] | ||||

| [dependencies] | [dependencies] | ||||

| error-chain = "0.11" | |||||

| error-chain = "0.12" | |||||

| tera = "0.11" | tera = "0.11" | ||||

| toml = "0.4" | toml = "0.4" | ||||

| image = "0.19.0" | |||||

+ 3

- 1

components/errors/src/lib.rs


| @@ -1,9 +1,10 @@ | |||||

| #![allow(unused_doc_comment)] | |||||

| #![allow(unused_doc_comments)] | |||||

| #[macro_use] | #[macro_use] | ||||

| extern crate error_chain; | extern crate error_chain; | ||||

| extern crate tera; | extern crate tera; | ||||

| extern crate toml; | extern crate toml; | ||||

| extern crate image; | |||||

| error_chain! { | error_chain! { | ||||

| errors {} | errors {} | ||||

| @@ -15,6 +16,7 @@ error_chain! { | |||||

| foreign_links { | foreign_links { | ||||

| Io(::std::io::Error); | Io(::std::io::Error); | ||||

| Toml(toml::de::Error); | Toml(toml::de::Error); | ||||

| Image(image::ImageError); | |||||

| } | } | ||||

| } | } | ||||

+ 0

- 1

components/front_matter/Cargo.toml


| @@ -12,5 +12,4 @@ toml = "0.4" | |||||

| regex = "1" | regex = "1" | ||||

| lazy_static = "1" | lazy_static = "1" | ||||

| errors = { path = "../errors" } | errors = { path = "../errors" } | ||||

+ 0

- 3

components/front_matter/src/lib.rs


| @@ -30,8 +30,6 @@ lazy_static! { | |||||

| pub enum SortBy { | pub enum SortBy { | ||||

| /// Most recent to oldest | /// Most recent to oldest | ||||

| Date, | Date, | ||||

| /// Lower order comes last | |||||

| Order, | |||||

| /// Lower weight comes first | /// Lower weight comes first | ||||

| Weight, | Weight, | ||||

| /// No sorting | /// No sorting | ||||

| @@ -151,5 +149,4 @@ date = 2002-10-12"#; | |||||

| let res = split_page_content(Path::new(""), content); | let res = split_page_content(Path::new(""), content); | ||||

| assert!(res.is_err()); | assert!(res.is_err()); | ||||

| } | } | ||||

| } | } | ||||

+ 25

- 50

components/front_matter/src/page.rs


| @@ -1,4 +1,5 @@ | |||||

| use std::result::{Result as StdResult}; | |||||

| use std::collections::HashMap; | |||||

| use std::result::Result as StdResult; | |||||

| use chrono::prelude::*; | use chrono::prelude::*; | ||||

| use tera::{Map, Value}; | use tera::{Map, Value}; | ||||

| @@ -21,7 +22,7 @@ fn from_toml_datetime<'de, D>(deserializer: D) -> StdResult<Option<String>, D::E | |||||

| fn convert_toml_date(table: Map<String, Value>) -> Value { | fn convert_toml_date(table: Map<String, Value>) -> Value { | ||||

| let mut new = Map::new(); | let mut new = Map::new(); | ||||

| for (k, v) in table.into_iter() { | |||||

| for (k, v) in table { | |||||

| if k == "$__toml_private_datetime" { | if k == "$__toml_private_datetime" { | ||||

| return v; | return v; | ||||

| } | } | ||||

| @@ -34,7 +35,7 @@ fn convert_toml_date(table: Map<String, Value>) -> Value { | |||||

| return Value::Object(new); | return Value::Object(new); | ||||

| } | } | ||||

| new.insert(k, convert_toml_date(o)); | new.insert(k, convert_toml_date(o)); | ||||

| }, | |||||

| } | |||||

| _ => { new.insert(k, v); } | _ => { new.insert(k, v); } | ||||

| } | } | ||||

| } | } | ||||

| @@ -51,8 +52,8 @@ fn fix_toml_dates(table: Map<String, Value>) -> Value { | |||||

| match value { | match value { | ||||

| Value::Object(mut o) => { | Value::Object(mut o) => { | ||||

| new.insert(key, convert_toml_date(o)); | new.insert(key, convert_toml_date(o)); | ||||

| }, | |||||

| _ => { new.insert(key, value); }, | |||||

| } | |||||

| _ => { new.insert(key, value); } | |||||

| } | } | ||||

| } | } | ||||

| @@ -80,10 +81,7 @@ pub struct PageFrontMatter { | |||||

| /// otherwise is set after parsing front matter and sections | /// otherwise is set after parsing front matter and sections | ||||

| /// Can't be an empty string if present | /// Can't be an empty string if present | ||||

| pub path: Option<String>, | pub path: Option<String>, | ||||

| /// Tags, not to be confused with categories | |||||

| pub tags: Option<Vec<String>>, | |||||

| /// Only one category allowed. Can't be an empty string if present | |||||

| pub category: Option<String>, | |||||

| pub taxonomies: HashMap<String, Vec<String>>, | |||||

| /// Integer to use to order content. Lowest is at the bottom, highest first | /// Integer to use to order content. Lowest is at the bottom, highest first | ||||

| pub order: Option<usize>, | pub order: Option<usize>, | ||||

| /// Integer to use to order content. Highest is at the bottom, lowest first | /// Integer to use to order content. Highest is at the bottom, lowest first | ||||

| @@ -122,12 +120,6 @@ impl PageFrontMatter { | |||||

| } | } | ||||

| } | } | ||||

| if let Some(ref category) = f.category { | |||||

| if category == "" { | |||||

| bail!("`category` can't be empty if present") | |||||

| } | |||||

| } | |||||

| f.extra = match fix_toml_dates(f.extra) { | f.extra = match fix_toml_dates(f.extra) { | ||||

| Value::Object(o) => o, | Value::Object(o) => o, | ||||

| _ => unreachable!("Got something other than a table in page extra"), | _ => unreachable!("Got something other than a table in page extra"), | ||||

| @@ -155,13 +147,6 @@ impl PageFrontMatter { | |||||

| pub fn weight(&self) -> usize { | pub fn weight(&self) -> usize { | ||||

| self.weight.unwrap() | self.weight.unwrap() | ||||

| } | } | ||||

| pub fn has_tags(&self) -> bool { | |||||

| match self.tags { | |||||

| Some(ref t) => !t.is_empty(), | |||||

| None => false | |||||

| } | |||||

| } | |||||

| } | } | ||||

| impl Default for PageFrontMatter { | impl Default for PageFrontMatter { | ||||

| @@ -173,8 +158,7 @@ impl Default for PageFrontMatter { | |||||

| draft: false, | draft: false, | ||||

| slug: None, | slug: None, | ||||

| path: None, | path: None, | ||||

| tags: None, | |||||

| category: None, | |||||

| taxonomies: HashMap::new(), | |||||

| order: None, | order: None, | ||||

| weight: None, | weight: None, | ||||

| aliases: Vec::new(), | aliases: Vec::new(), | ||||

| @@ -211,21 +195,6 @@ mod tests { | |||||

| assert_eq!(res.description.unwrap(), "hey there".to_string()) | assert_eq!(res.description.unwrap(), "hey there".to_string()) | ||||

| } | } | ||||

| #[test] | |||||

| fn can_parse_tags() { | |||||

| let content = r#" | |||||

| title = "Hello" | |||||

| description = "hey there" | |||||

| slug = "hello-world" | |||||

| tags = ["rust", "html"]"#; | |||||

| let res = PageFrontMatter::parse(content); | |||||

| assert!(res.is_ok()); | |||||

| let res = res.unwrap(); | |||||

| assert_eq!(res.title.unwrap(), "Hello".to_string()); | |||||

| assert_eq!(res.slug.unwrap(), "hello-world".to_string()); | |||||

| assert_eq!(res.tags.unwrap(), ["rust".to_string(), "html".to_string()]); | |||||

| } | |||||

| #[test] | #[test] | ||||

| fn errors_with_invalid_front_matter() { | fn errors_with_invalid_front_matter() { | ||||

| @@ -234,17 +203,6 @@ mod tests { | |||||

| assert!(res.is_err()); | assert!(res.is_err()); | ||||

| } | } | ||||

| #[test] | |||||

| fn errors_on_non_string_tag() { | |||||

| let content = r#" | |||||

| title = "Hello" | |||||

| description = "hey there" | |||||

| slug = "hello-world" | |||||

| tags = ["rust", 1]"#; | |||||

| let res = PageFrontMatter::parse(content); | |||||

| assert!(res.is_err()); | |||||

| } | |||||

| #[test] | #[test] | ||||

| fn errors_on_present_but_empty_slug() { | fn errors_on_present_but_empty_slug() { | ||||

| let content = r#" | let content = r#" | ||||

| @@ -344,4 +302,21 @@ mod tests { | |||||

| assert!(res.is_ok()); | assert!(res.is_ok()); | ||||

| assert_eq!(res.unwrap().extra["something"]["some-date"], to_value("2002-14-01").unwrap()); | assert_eq!(res.unwrap().extra["something"]["some-date"], to_value("2002-14-01").unwrap()); | ||||

| } | } | ||||

| #[test] | |||||

| fn can_parse_taxonomies() { | |||||

| let content = r#" | |||||

| title = "Hello World" | |||||

| [taxonomies] | |||||

| tags = ["Rust", "JavaScript"] | |||||

| categories = ["Dev"] | |||||

| "#; | |||||

| let res = PageFrontMatter::parse(content); | |||||

| println!("{:?}", res); | |||||

| assert!(res.is_ok()); | |||||

| let res2 = res.unwrap(); | |||||

| assert_eq!(res2.taxonomies["categories"], vec!["Dev"]); | |||||

| assert_eq!(res2.taxonomies["tags"], vec!["Rust", "JavaScript"]); | |||||

| } | |||||

| } | } | ||||

components/rendering/examples/generate_sublime.rs → components/highlighting/examples/generate_sublime.rs


+ 16

- 3

components/highlighting/src/lib.rs


| @@ -4,9 +4,10 @@ extern crate syntect; | |||||

| use syntect::dumps::from_binary; | use syntect::dumps::from_binary; | ||||

| use syntect::parsing::SyntaxSet; | use syntect::parsing::SyntaxSet; | ||||

| use syntect::highlighting::ThemeSet; | |||||

| use syntect::highlighting::{ThemeSet, Theme}; | |||||

| use syntect::easy::HighlightLines; | |||||

| thread_local!{ | |||||

| thread_local! { | |||||

| pub static SYNTAX_SET: SyntaxSet = { | pub static SYNTAX_SET: SyntaxSet = { | ||||

| let mut ss: SyntaxSet = from_binary(include_bytes!("../../../sublime_syntaxes/newlines.packdump")); | let mut ss: SyntaxSet = from_binary(include_bytes!("../../../sublime_syntaxes/newlines.packdump")); | ||||

| ss.link_syntaxes(); | ss.link_syntaxes(); | ||||

| @@ -14,6 +15,18 @@ thread_local!{ | |||||

| }; | }; | ||||

| } | } | ||||

| lazy_static!{ | |||||

| lazy_static! { | |||||

| pub static ref THEME_SET: ThemeSet = from_binary(include_bytes!("../../../sublime_themes/all.themedump")); | pub static ref THEME_SET: ThemeSet = from_binary(include_bytes!("../../../sublime_themes/all.themedump")); | ||||

| } | } | ||||

| pub fn get_highlighter<'a>(theme: &'a Theme, info: &str) -> HighlightLines<'a> { | |||||

| SYNTAX_SET.with(|ss| { | |||||

| let syntax = info | |||||

| .split(' ') | |||||

| .next() | |||||

| .and_then(|lang| ss.find_syntax_by_token(lang)) | |||||

| .unwrap_or_else(|| ss.find_syntax_plain_text()); | |||||

| HighlightLines::new(syntax, theme) | |||||

| }) | |||||

| } | |||||
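
Note on `get_highlighter` above: the function takes the first space-separated token of a code fence's info string, looks it up as a syntax, and falls back to plain text when nothing matches. The sketch below isolates that lookup-with-fallback chain without depending on syntect. `find_syntax_by_token` here is a hypothetical stand-in table and `resolve_syntax` is an invented name, so this only illustrates the control flow, not the library's behaviour.

```rust
/// Hypothetical lookup table standing in for a real syntax set.
fn find_syntax_by_token(token: &str) -> Option<&'static str> {
    match token {
        "rs" | "rust" => Some("Rust"),
        "py" | "python" => Some("Python"),
        _ => None,
    }
}

/// Resolve the syntax name for a fence info string such as "rust hl_lines=1".
/// Only the first space-separated token is considered; unknown or empty
/// tokens fall back to plain text.
fn resolve_syntax(info: &str) -> &'static str {
    info.split(' ')
        .next()
        .and_then(find_syntax_by_token)
        .unwrap_or("Plain Text")
}
```

An empty info string still yields one (empty) token from `split`, so the fallback is reached through the failed lookup rather than through `next()` returning `None`.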

+ 14

- 0

components/imageproc/Cargo.toml


| @@ -0,0 +1,14 @@ | |||||

| [package] | |||||

| name = "imageproc" | |||||

| version = "0.1.0" | |||||

| authors = ["Vojtěch Král <vojtech@kral.hk>"] | |||||

| [dependencies] | |||||

| lazy_static = "1" | |||||

| regex = "1.0" | |||||

| tera = "0.11" | |||||

| image = "0.19" | |||||

| rayon = "1" | |||||

| errors = { path = "../errors" } | |||||

| utils = { path = "../utils" } | |||||

+ 384

- 0

components/imageproc/src/lib.rs


| @@ -0,0 +1,384 @@ | |||||

| #[macro_use] | |||||

| extern crate lazy_static; | |||||

| extern crate regex; | |||||

| extern crate image; | |||||

| extern crate rayon; | |||||

| extern crate utils; | |||||

| extern crate errors; | |||||

| use std::path::{Path, PathBuf}; | |||||

| use std::hash::{Hash, Hasher}; | |||||

| use std::collections::HashMap; | |||||

| use std::collections::hash_map::Entry as HEntry; | |||||

| use std::collections::hash_map::DefaultHasher; | |||||

| use std::fs::{self, File}; | |||||

| use regex::Regex; | |||||

| use image::{GenericImage, FilterType}; | |||||

| use image::jpeg::JPEGEncoder; | |||||

| use rayon::prelude::*; | |||||

| use utils::fs as ufs; | |||||

| use errors::{Result, ResultExt}; | |||||

| static RESIZED_SUBDIR: &'static str = "_processed_images"; | |||||

| lazy_static! { | |||||

| pub static ref RESIZED_FILENAME: Regex = Regex::new(r#"([0-9a-f]{16})([0-9a-f]{2})[.]jpg"#).unwrap(); | |||||

| } | |||||

| /// Describes the precise kind of a resize operation | |||||

| #[derive(Debug, Clone, Copy, PartialEq, Eq)] | |||||

| pub enum ResizeOp { | |||||

| /// A simple scale operation that doesn't take aspect ratio into account | |||||

| Scale(u32, u32), | |||||

| /// Scales the image to a specified width with height computed such | |||||

| /// that aspect ratio is preserved | |||||

| FitWidth(u32), | |||||

| /// Scales the image to a specified height with width computed such | |||||

| /// that aspect ratio is preserved | |||||

| FitHeight(u32), | |||||

| /// Scales the image such that it fits within the specified width and | |||||

| /// height preserving aspect ratio. | |||||

| /// Either dimension may end up being smaller, but never larger than specified. | |||||

| Fit(u32, u32), | |||||

| /// Scales the image such that it fills the specified width and height. | |||||

| /// Output will always have the exact dimensions specified. | |||||

| /// The part of the image that doesn't fit in the thumbnail due to differing | |||||

| /// aspect ratio will be cropped away, if any. | |||||

| Fill(u32, u32), | |||||

| } | |||||

| impl ResizeOp { | |||||

| pub fn from_args(op: &str, width: Option<u32>, height: Option<u32>) -> Result<ResizeOp> { | |||||

| use ResizeOp::*; | |||||

| // Validate args: | |||||

| match op { | |||||

| "fit_width" => if width.is_none() { | |||||

| return Err("op=\"fit_width\" requires a `width` argument".to_string().into()); | |||||

| }, | |||||

| "fit_height" => if height.is_none() { | |||||

| return Err("op=\"fit_height\" requires a `height` argument".to_string().into()); | |||||

| }, | |||||

| "scale" | "fit" | "fill" => if width.is_none() || height.is_none() { | |||||

| return Err(format!("op={} requires a `width` and `height` argument", op).into()); | |||||

| }, | |||||

| _ => return Err(format!("Invalid image resize operation: {}", op).into()) | |||||

| }; | |||||

| Ok(match op { | |||||

| "scale" => Scale(width.unwrap(), height.unwrap()), | |||||

| "fit_width" => FitWidth(width.unwrap()), | |||||

| "fit_height" => FitHeight(height.unwrap()), | |||||

| "fit" => Fit(width.unwrap(), height.unwrap()), | |||||

| "fill" => Fill(width.unwrap(), height.unwrap()), | |||||

| _ => unreachable!(), | |||||

| }) | |||||

| } | |||||

| pub fn width(self) -> Option<u32> { | |||||

| use ResizeOp::*; | |||||

| match self { | |||||

| Scale(w, _) => Some(w), | |||||

| FitWidth(w) => Some(w), | |||||

| FitHeight(_) => None, | |||||

| Fit(w, _) => Some(w), | |||||

| Fill(w, _) => Some(w), | |||||

| } | |||||

| } | |||||

| pub fn height(self) -> Option<u32> { | |||||

| use ResizeOp::*; | |||||

| match self { | |||||

| Scale(_, h) => Some(h), | |||||

| FitWidth(_) => None, | |||||

| FitHeight(h) => Some(h), | |||||

| Fit(_, h) => Some(h), | |||||

| Fill(_, h) => Some(h), | |||||

| } | |||||

| } | |||||

| } | |||||

| impl From<ResizeOp> for u8 { | |||||

| fn from(op: ResizeOp) -> u8 { | |||||

| use ResizeOp::*; | |||||

| match op { | |||||

| Scale(_, _) => 1, | |||||

| FitWidth(_) => 2, | |||||

| FitHeight(_) => 3, | |||||

| Fit(_, _) => 4, | |||||

| Fill(_, _) => 5, | |||||

| } | |||||

| } | |||||

| } | |||||

| impl Hash for ResizeOp { | |||||

| fn hash<H: Hasher>(&self, hasher: &mut H) { | |||||

| hasher.write_u8(u8::from(*self)); | |||||

| if let Some(w) = self.width() { hasher.write_u32(w); } | |||||

| if let Some(h) = self.height() { hasher.write_u32(h); } | |||||

| } | |||||

| } | |||||

| /// Holds all data needed to perform a resize operation | |||||

| #[derive(Debug, PartialEq, Eq)] | |||||

| pub struct ImageOp { | |||||

| source: String, | |||||

| op: ResizeOp, | |||||

| quality: u8, | |||||

| /// Hash of the above parameters | |||||

| hash: u64, | |||||

| /// If there is a hash collision with another ImageOp, this contains a sequential ID > 0 | |||||

| /// identifying the collision in the order encountered (which is essentially random). | |||||

| /// Therefore, ImageOps with collisions (i.e. collision_id > 0) are always considered out of date. | |||||

| /// Note that this is very unlikely to happen in practice. | |||||

| collision_id: u32, | |||||

| } | |||||

| impl ImageOp { | |||||

| pub fn new(source: String, op: ResizeOp, quality: u8) -> ImageOp { | |||||

| let mut hasher = DefaultHasher::new(); | |||||

| hasher.write(source.as_ref()); | |||||

| op.hash(&mut hasher); | |||||

| hasher.write_u8(quality); | |||||

| let hash = hasher.finish(); | |||||

| ImageOp { source, op, quality, hash, collision_id: 0 } | |||||

| } | |||||

| pub fn from_args( | |||||

| source: String, | |||||

| op: &str, | |||||

| width: Option<u32>, | |||||

| height: Option<u32>, | |||||

| quality: u8, | |||||

| ) -> Result<ImageOp> { | |||||

| let op = ResizeOp::from_args(op, width, height)?; | |||||

| Ok(Self::new(source, op, quality)) | |||||

| } | |||||

| fn perform(&self, content_path: &Path, target_path: &Path) -> Result<()> { | |||||

| use ResizeOp::*; | |||||

| let src_path = content_path.join(&self.source); | |||||

| if !ufs::file_stale(&src_path, target_path) { | |||||

| return Ok(()); | |||||

| } | |||||

| let mut img = image::open(&src_path)?; | |||||

| let (img_w, img_h) = img.dimensions(); | |||||

| const RESIZE_FILTER: FilterType = FilterType::Gaussian; | |||||

| const RATIO_EPSILLION: f32 = 0.1; | |||||

| let img = match self.op { | |||||

| Scale(w, h) => img.resize_exact(w, h, RESIZE_FILTER), | |||||

| FitWidth(w) => img.resize(w, u32::max_value(), RESIZE_FILTER), | |||||

| FitHeight(h) => img.resize(u32::max_value(), h, RESIZE_FILTER), | |||||

| Fit(w, h) => img.resize(w, h, RESIZE_FILTER), | |||||

| Fill(w, h) => { | |||||

| let factor_w = img_w as f32 / w as f32; | |||||

| let factor_h = img_h as f32 / h as f32; | |||||

| if (factor_w - factor_h).abs() <= RATIO_EPSILLION { | |||||

| // If the horizontal and vertical factors are very similar, | |||||

| // the aspect ratios are close enough that there's not much point | |||||

| // in cropping, so just perform a simple scale in this case. | |||||

| img.resize_exact(w, h, RESIZE_FILTER) | |||||

| } else { | |||||

| // We perform the fill such that a crop is performed first | |||||

| // and then resize_exact can be used, which should be cheaper than | |||||

| // resizing and then cropping (smaller number of pixels to resize). | |||||

| let (crop_w, crop_h) = if factor_w < factor_h { | |||||

| (img_w, (factor_w * h as f32).round() as u32) | |||||

| } else { | |||||

| ((factor_h * w as f32).round() as u32, img_h) | |||||

| }; | |||||

| let (offset_w, offset_h) = if factor_w < factor_h { | |||||

| (0, (img_h - crop_h) / 2) | |||||

| } else { | |||||

| ((img_w - crop_w) / 2, 0) | |||||

| }; | |||||

| img.crop(offset_w, offset_h, crop_w, crop_h) | |||||

| .resize_exact(w, h, RESIZE_FILTER) | |||||

| } | |||||

| } | |||||

| }; | |||||

| let mut f = File::create(target_path)?; | |||||

| let mut enc = JPEGEncoder::new_with_quality(&mut f, self.quality); | |||||

| let (img_w, img_h) = img.dimensions(); | |||||

| enc.encode(&img.raw_pixels(), img_w, img_h, img.color())?; | |||||

| Ok(()) | |||||

| } | |||||

| } | |||||

| /// A structure into which image operations can be enqueued and then performed. | |||||

| /// All output is written to a subdirectory of `static_path`, | |||||

| /// taking care of file staleness based on timestamps and possible hash collisions. | |||||

| #[derive(Debug)] | |||||

| pub struct Processor { | |||||

| content_path: PathBuf, | |||||

| resized_path: PathBuf, | |||||

| resized_url: String, | |||||

| /// A map of ImageOps keyed by their stored hash. | |||||

| /// Note that this cannot be a HashSet, because a HashSet resolves collisions itself, which we don't want: | |||||

| /// we need to be aware of and handle collisions ourselves. | |||||

| img_ops: HashMap<u64, ImageOp>, | |||||

| /// Hash collisions go here: | |||||

| img_ops_collisions: Vec<ImageOp>, | |||||

| } | |||||

| impl Processor { | |||||

| pub fn new(content_path: PathBuf, static_path: &Path, base_url: &str) -> Processor { | |||||

| Processor { | |||||

| content_path, | |||||

| resized_path: static_path.join(RESIZED_SUBDIR), | |||||

| resized_url: Self::resized_url(base_url), | |||||

| img_ops: HashMap::new(), | |||||

| img_ops_collisions: Vec::new(), | |||||

| } | |||||

| } | |||||

| fn resized_url(base_url: &str) -> String { | |||||

| if base_url.ends_with('/') { | |||||

| format!("{}{}", base_url, RESIZED_SUBDIR) | |||||

| } else { | |||||

| format!("{}/{}", base_url, RESIZED_SUBDIR) | |||||

| } | |||||

| } | |||||

| pub fn set_base_url(&mut self, base_url: &str) { | |||||

| self.resized_url = Self::resized_url(base_url); | |||||

| } | |||||

| pub fn source_exists(&self, source: &str) -> bool { | |||||

| self.content_path.join(source).exists() | |||||

| } | |||||

| pub fn num_img_ops(&self) -> usize { | |||||

| self.img_ops.len() + self.img_ops_collisions.len() | |||||

| } | |||||

| fn insert_with_collisions(&mut self, mut img_op: ImageOp) -> u32 { | |||||

| match self.img_ops.entry(img_op.hash) { | |||||

| HEntry::Occupied(entry) => if *entry.get() == img_op { return 0; }, | |||||

| HEntry::Vacant(entry) => { | |||||

| entry.insert(img_op); | |||||

| return 0; | |||||

| } | |||||

| } | |||||

| // If we get here, that means a hash collision. | |||||